Category: MySQL

SQL:2023 and how well MySQL and MariaDB comply

SQL:2023 is now the official SQL standard. I’ll compare some of the new optional features with what MySQL and MariaDB support already, and say whether I judge they are compliant.

At the moment I don’t have draft documents (my membership on the standard committee ended long ago), so I gratefully get my news from Oracle and Peter Eisentraut. It’s early days, I may be making mistakes and may be guessing wrong about what new features are important.

LPAD and RPAD

MySQL and MariaDB examples:

SELECT LPAD('hi',3,'?'); causes '?hi'.

SELECT RPAD('hi',3,'?'); causes 'hi?'.

Judgment: compliant with SQL:2023 optional feature T055.

The third argument is optional and default is the space character. For some reason I’m not seeing the documentation in the manuals, but it is standard behaviour.

LTRIM and RTRIM and BTRIM

MySQL and MariaDB examples:

SELECT LTRIM(' *'); -- causes '*'.

SELECT RTRIM('* '); -- causes '*'.

Judgment: not compliant with SQL:2023 optional feature T056.

Although SQL:2023 now has LTRIM and RTRIM, they are two-argument, perhaps a bit like Oracle.

Also SQL:2023 has BTRIM for trimming from both ends. Although MySQL and MariaDB have TRIM(BOTH …), it’s not quite the same thing.
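To make "not quite the same thing" concrete, here is a minimal Python sketch, not MySQL code. It assumes that the two-argument standard functions treat the second argument as a set of characters to strip (my reading, and what Oracle's LTRIM does), while TRIM(BOTH remstr FROM str) in MySQL and MariaDB strips repeated copies of the whole string remstr. The function names are made up for illustration.

```python
def ltrim(source, chars=" "):
    """Strip leading characters that belong to the set `chars`."""
    return source.lstrip(chars)


def rtrim(source, chars=" "):
    """Strip trailing characters that belong to the set `chars`."""
    return source.rstrip(chars)


def btrim(source, chars=" "):
    """Strip characters in the set `chars` from both ends."""
    return source.strip(chars)


def trim_both_string(source, remstr=" "):
    """Model TRIM(BOTH remstr FROM source): remove whole copies of remstr."""
    if not remstr:
        return source
    while source.startswith(remstr):
        source = source[len(remstr):]
    while source.endswith(remstr):
        source = source[:-len(remstr)]
    return source


set_based = btrim("yxhixy", "xy")                # 'y' and 'x' are both in the set
string_based = trim_both_string("yxhixy", "xy")  # only the trailing "xy" matches
```

With the same arguments the set-based version leaves only "hi", while the string-based version leaves "yxhi", because the leading "yx" is not a copy of "xy".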

VARCHAR instead of VARCHAR(length)

MySQL and MariaDB example:

CREATE TABLE t(s1 VARCHAR(1)); -- (length) is mandatory

Judgment: not compliant with SQL:2023 optional feature T081

A DBMS that supports feature T081 lets users omit the maximum length; the actual maximum is then up to the implementation, which in this situation could be 65535 bytes. This is unfortunate and it might be my fault: I think I used to insist that length should be mandatory in MySQL.

Although I can use the TEXT data type instead for indefinite-length strings, it has different characteristics.

ANY_VALUE, emphasizing use with GROUP BY

MySQL and MariaDB example:

SELECT s1, s2 FROM t GROUP BY s1;

Judgment: compliant with SQL:2023 optional feature T626, almost, alas

Probably you noticed that s2 is not in the GROUP BY clause, and yet the syntax is legal — any of the original t values could appear.

The standard would yield something similar with different syntax: SELECT s1, ANY_VALUE(s2) FROM t GROUP BY s1;

Or, as Oracle describes it, “[ANY_VALUE] eliminates the necessity to specify every column as part of the GROUP BY clause”.

That means it’s non-deterministic. Usually I’ve cared more about non-standardness. But now that argument is weaker.
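A small Python sketch of the non-determinism, assuming a table of (s1, s2) rows. The helper name and the sample rows are hypothetical; the point is only that any s2 from a group is an acceptable answer.

```python
def group_by_any_value(rows):
    """Model SELECT s1, ANY_VALUE(s2) FROM t GROUP BY s1 for (s1, s2) rows."""
    picked = {}
    for s1, s2 in rows:
        # Keep the first s2 seen for each s1. Any other s2 from the same
        # group would be an equally legitimate result.
        picked.setdefault(s1, s2)
    return picked


rows = [(1, "a"), (1, "b"), (2, "c")]
result = group_by_any_value(rows)
```

This sketch happens to pick the first value it meets, but a DBMS is free to pick a different one, and to pick differently on the next run.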

ORDER BY, emphasizing use with GROUP BY

MySQL and MariaDB example:

CREATE TABLE t (s1 int, s2 int, UNIQUE (s2));

INSERT INTO t VALUES (1,1),(7,2),(5,3);

SELECT s1 FROM t GROUP BY s1 ORDER BY s2;

Judgment: not compliant with SQL:2023 optional feature F868.

I’m probably misunderstanding something here about the intent, but the effect is that something that’s not in the GROUP BY clause can determine the ordering. In this case, because column s2 is unique, it’s maybe not utterly absurd. But in MySQL and MariaDB, the result is not guaranteed to be in order by column s2.

GREATEST and LEAST

MySQL and MariaDB syntax:

SELECT GREATEST(2,1,3); -- result = 3

SELECT LEAST(2,1,3); -- result = 1

Judgment: compliant with SQL:2023 optional feature T054

UNIQUE NULLS DISTINCT

MySQL and MariaDB example:

CREATE TABLE t (s1 INT, UNIQUE(s1)); INSERT INTO t VALUES (NULL),(NULL);

Judgment: partly compliant with SQL:2023 feature F292 unique null treatment

To understand this, your required reading is my old blog post NULL and UNIQUE. You have to read the whole thing to understand that the standard document was not contradictory, and that MySQL and MariaDB have always been compliant, because …

In a UNIQUE column, you can insert NULLs multiple times.

However, because lots of people never understood, there are implementations where you cannot do so. Therefore feature F292 allows new syntax:

CREATE TABLE t (s1 INT, UNIQUE NULLS DISTINCT (s1));

or

CREATE TABLE t (s1 INT, UNIQUE NULLS NOT DISTINCT (s1));

MySQL and MariaDB could easily half-comply by allowing the first option and saying it is the default. (The standard now says the default is implementation-defined.) (A backward step.)
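The two treatments can be modelled in a few lines of Python. This is a sketch of the rule, not of any DBMS's implementation; the function name and the use of None for NULL are my own choices for illustration.

```python
def violates_unique(existing, new_value, nulls_distinct=True):
    """Return True if inserting new_value would break a single-column UNIQUE.

    `existing` holds the values already in the column, with None for NULL.
    """
    if new_value is None:
        if nulls_distinct:
            # NULLS DISTINCT: a NULL never equals another NULL,
            # so it can never collide with an existing row.
            return False
        # NULLS NOT DISTINCT: a second NULL counts as a duplicate.
        return any(value is None for value in existing)
    return new_value in existing


second_null_distinct = violates_unique([None], None, nulls_distinct=True)
second_null_not_distinct = violates_unique([None], None, nulls_distinct=False)
```

The first call models what MySQL and MariaDB do with INSERT INTO t VALUES (NULL),(NULL); the second models the implementations that reject the second NULL.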

Hexadecimal and octal and binary integer literals

MySQL and MariaDB example:

SELECT 0x4141;

Judgment: not compliant with SQL:2023 optional feature T661

Did you notice that I said “integer literals”? That’s what’s wrong, 0x4141 in standard SQL is now supposed to be an integer, decimal 16705. But MySQL and MariaDB will treat it as a string, ‘AA’.

It is always legal for an implementation to have “non-conforming” syntax that causes “implementation-dependent” results. That’s what happened with 0x4141, in the mists of time. But now, if one decides to “conform” with the syntax of this optional feature, one must change the behaviour drastically: not merely a different value but a different data type.

I suppose that the road to compliance would involve deprecations, warnings, sql_mode changes, etc., lasting years.
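The two readings of the same four hex digits, shown as plain Python arithmetic rather than SQL. The variable names are made up for illustration.

```python
digits = "4141"

# SQL:2023 reading of 0x4141: an integer literal.
as_integer = int(digits, 16)

# MySQL and MariaDB reading of 0x4141: a string whose bytes are 0x41, 0x41.
as_string = bytes.fromhex(digits).decode("latin1")
```

Here as_integer is 16705 and as_string is 'AA', so the difference is a different data type, not just a different value.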

Underscore digit separators

MySQL and MariaDB example:

CREATE TABLE t (10_000_000 INT);

INSERT INTO t VALUES (1);

SELECT 10_000_000;

Judgment: not compliant with SQL:2023 optional feature T662 Underscores in numeric literals

Big numbers like 10,000,000 cannot be expressed in SQL using commas because that looks like three separate values. The solution, which I see was implemented a few years ago in Python, is to express 10,000,000 as 10_000_000 — that is, underscores instead of commas.

In standard SQL, an identifier cannot begin with a digit. But in MySQL and MariaDB, it can, so 10_000_000 is a legal regular identifier. This is another example of an extension that causes trouble in the long term.

There is an idiom about this, usually about chickens: “come home to roost”. As Cambridge Dictionary puts it: “If a past action, mistake, etc. comes home to roost, it causes problems at a later date, especially when this is expected or seems deserved …”
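The clash can be sketched in Python as two toy token classifiers. Both are heavy simplifications and both are my assumptions: they handle ASCII only, ignore decimals and exponents, and assume that the standard allows single underscores between digits. The function names are hypothetical.

```python
import re


def classify_standard(token):
    """Roughly how SQL:2023 with feature T662 would see a token."""
    if re.fullmatch(r"[0-9]+(_[0-9]+)*", token):
        return "integer literal"
    if re.fullmatch(r"[A-Za-z][A-Za-z0-9_]*", token):
        return "identifier"
    return "other"


def classify_mysql(token):
    """Roughly how MySQL and MariaDB see a token today."""
    if re.fullmatch(r"[0-9]+", token):
        return "integer literal"
    # An unquoted identifier may begin with a digit,
    # as long as it does not consist solely of digits.
    if re.fullmatch(r"[A-Za-z0-9_$]+", token):
        return "identifier"
    return "other"


def literal_value(token):
    """Value of an underscore-separated integer literal."""
    return int(token.replace("_", ""))
```

With these rules, classify_standard("10_000_000") says "integer literal" while classify_mysql("10_000_000") says "identifier", which is why the SELECT in the example looks for a column instead of returning ten million.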

JSON

MariaDB example:

CREATE TABLE t (s1 JSON);

Judgment: partial compliance with SQL:2023 feature T801 JSON data type etc.

I’m not going to try to analyze all the new JSON options because it’s a big subject. Also there’s a CYCLE clause and a new section of the standard, part 16 Property Graph Queries (SQL/PGQ), which I don’t think is appropriate to get into during this comparison of features in the foundation. Maybe some other time.

Score

Actually, despite pointing to some non-compliance, I have to give credit to the people who decided to implement some features long before they became standard. (And I don’t credit myself at all because they appeared before I was a MySQL architect, and in fact I objected to some of them while I was with MySQL AB.)

Well done.

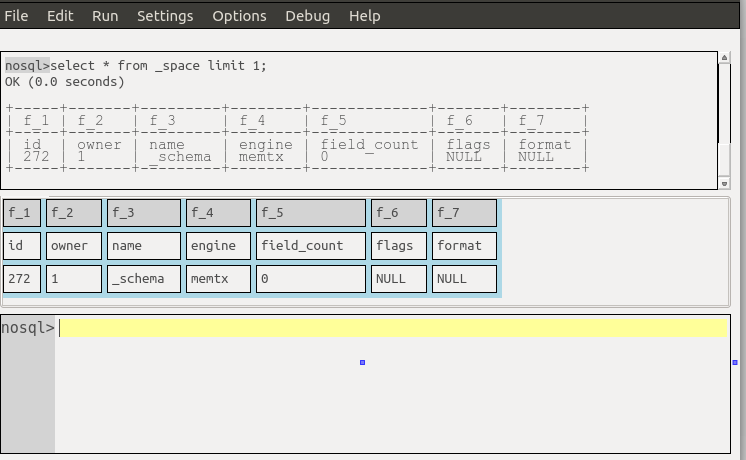

ocelotgui news

The new version of the Ocelot GUI application, ocelotgui, is 2.0. As always the source and executable files are on github. The biggest new feature is charts. I’ll say more in a later blog post.

Update added 2023-06-12: Re ANY_VALUE: see comment by Øystein Grøvlen. As for the question from Federico Razzoli, I cannot give an authoritative answer. Also I’ve been reminded that MariaDB request MDEV-10426 regarding ANY_VALUE is marked as part of MariaDB 11.2 flow, but until then I guess MySQL is a bit ahead.

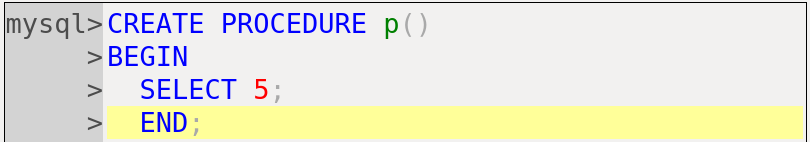

No more mysql.proc in MySQL 8.0

MySQL has thrown away the mysql.proc table for version 8.0 Development Releases.

The best explanation that I’ve seen is the one that Dmitry Lenev gave at a conference last October.

To summarize it: mysql.proc and its ilk are non-transactional, redundancy is bad, and MySQL can fix some known bugs by moving over to information_schema tables backed by InnoDB. Of course I approve for a separate reason: mysql.proc is non-standard and therefore it is a mistake.

On the other hand, programmers that have invested some time in using mysql.proc will have some trouble changing them to use information_schema.routines instead.

Table definition differences

I did a complex left join of the information_schema.columns for mysql.proc (P) and for information_schema.routines (R) in MySQL 5.7, and saw this.

| P_column_name | P_column_type | P_collation_name | R_column_name | R_column_type | R_collation_name |
|---|---|---|---|---|---|
| db | char(64) | utf8_bin | ROUTINE_SCHEMA | varchar(64) | utf8_general_ci |
| name | char(64) | utf8_general_ci | ROUTINE_NAME | varchar(64) | utf8_general_ci |
| type | enum('FUNCTION','PRO | utf8_general_ci | ROUTINE_TYPE | varchar(9) | utf8_general_ci |
| specific_name | char(64) | utf8_general_ci | SPECIFIC_NAME | varchar(64) | utf8_general_ci |
| language | enum('SQL') | utf8_general_ci | EXTERNAL_LANGUAGE | varchar(64) | utf8_general_ci |
| sql_data_access | enum('CONTAINS_SQL', | utf8_general_ci | SQL_DATA_ACCESS | varchar(64) | utf8_general_ci |
| is_deterministic | enum('YES','NO') | utf8_general_ci | IS_DETERMINISTIC | varchar(3) | utf8_general_ci |
| security_type | enum('INVOKER','DEFI | utf8_general_ci | SECURITY_TYPE | varchar(7) | utf8_general_ci |
| param_list | blob | NULL | NULL | NULL | NULL |
| returns | longblob | NULL | NULL | NULL | NULL |
| body | longblob | NULL | NULL | NULL | NULL |
| definer | char(77) | utf8_bin | DEFINER | varchar(77) | utf8_general_ci |
| created | timestamp | NULL | CREATED | datetime | NULL |
| modified | timestamp | NULL | LAST_ALTERED | datetime | NULL |
| sql_mode | set('REAL_AS_FLOAT', | utf8_general_ci | SQL_MODE | varchar(8192) | utf8_general_ci |
| comment | text | utf8_bin | ROUTINE_COMMENT | longtext | utf8_general_ci |
| character_set_client | char(32) | utf8_bin | CHARACTER_SET_CLIENT | varchar(32) | utf8_general_ci |
| collation_connection | char(32) | utf8_bin | COLLATION_CONNECTION | varchar(32) | utf8_general_ci |
| db_collation | char(32) | utf8_bin | DATABASE_COLLATION | varchar(32) | utf8_general_ci |
| body_utf8 | longblob | NULL | ROUTINE_DEFINITION | longtext | utf8_general_ci |

Remember the above chart is for MySQL version 5.7.

For MySQL 8.0.2 these column definitions have changed:

ROUTINE_SCHEMA: was varchar(64) utf8_general_ci, will be varchar(64) utf8_tolower_ci

ROUTINE_TYPE: was varchar(9) utf8_general_ci, will be enum

DATA_TYPE: was varchar(9), will be longtext

EXTERNAL_LANGUAGE: was varchar(64), will be binary(0)

SQL_DATA_ACCESS: was varchar(64), will be enum

SECURITY_TYPE: was varchar(7), will be enum

CREATED: was datetime, will be timestamp

LAST_ALTERED: was datetime, will be timestamp

DEFINER: was varchar(77) utf8_general_ci, will be varchar(93) utf8_bin

CHARACTER_SET_CLIENT: was varchar(32), will be varchar(64)

COLLATION_CONNECTION: was varchar(32), will be varchar(64)

DATABASE_COLLATION: was varchar(32), will be varchar(64)

… and more changes are possible.

I have included the dirt about column data type and collation so that it’s clear they are never exactly the same. This might affect applications that depend on exact size allocations and precise ordering. But usually it will make no difference to either programmers or end users.

What the chart shows is that mysql.proc.db corresponds to information_schema.routines.ROUTINE_SCHEMA, mysql.proc.name corresponds to information_schema.routines.ROUTINE_NAME, and so on. So if I had a simple SQL statement like

SELECT db, name FROM mysql.proc;

I could convert with ease to

SELECT ROUTINE_SCHEMA, ROUTINE_NAME FROM information_schema.routines;

(By the way I used name rather than specific_name because it’s in the primary key; the value is the same.)

However, three mysql.proc columns — param_list, returns, body — have no corresponding columns in information_schema.routines. Converting them will be more work.

param_list and returns

Let’s try

CREATE FUNCTION fx1(paramx1 INT, paramx2 DOUBLE) RETURNS CHAR(5) RETURN 'xxxxx';

Let’s look at it via mysql.proc (whenever I show mysql.proc I’m using MySQL 5.7):

SELECT param_list, returns FROM mysql.proc WHERE name='fx1';

Result:

| param_list | returns |
|---|---|
| paramx1 int | char(5) CHARSET latin1 |

Now let’s look at it with

SELECT DATA_TYPE, CHARACTER_MAXIMUM_LENGTH, CHARACTER_SET_NAME, DTD_IDENTIFIER FROM information_schema.routines WHERE routine_name='fx1';

Result:

| DATA_TYPE | CHARACTER_MAXIMUM_LENGTH | CHARACTER_SET_NAME | DTD_IDENTIFIER |
|---|---|---|---|
| char | 5 | latin1 | char(5) |

This isn’t too bad. All we have to do (with sql_mode='PIPES_AS_CONCAT') is concatenate

DATA_TYPE || '(' || CHARACTER_MAXIMUM_LENGTH || ')' || ' CHARSET ' || CHARACTER_SET_NAME

or, even simpler,

DTD_IDENTIFIER || ' CHARSET ' || CHARACTER_SET_NAME

and we’ve got “char(5) CHARSET latin1”, the same as what’s in mysql.proc.returns. Using DTD_IDENTIFIER avoids complications with other data types so I’ll always go with it.

It’s trickier to find a substitute for param_list, because parameters aren’t in information_schema.routines at all. We’d find them by saying

SELECT ORDINAL_POSITION, PARAMETER_MODE, PARAMETER_NAME, DTD_IDENTIFIER FROM information_schema.parameters WHERE specific_name = 'fx1';

| ORDINAL_POSITION | PARAMETER_MODE | PARAMETER_NAME | DTD_IDENTIFIER |
|---|---|---|---|
| 0 | NULL | NULL | char(5) |
| 1 | IN | paramx1 | int(11) |
| 2 | IN | paramx2 | double |

We don’t need to do anything with parameter #0 (it’s just a copy of the return type that information_schema.routines already shows); we only need to merge parameter #1 and parameter #2 into the main query. Like this (with sql_mode='PIPES_AS_CONCAT'), but skipping the details we’ve already seen:

SELECT routine_name, routine_body,

(SELECT group_concat(parameter_name || ' ' || dtd_identifier)

FROM information_schema.parameters p

WHERE p.specific_name = outertable.routine_name

AND ordinal_position > 0)

AS param_list

FROM information_schema.routines outertable

WHERE routine_name = 'fx1';

Result:

| ROUTINE_NAME | ROUTINE_BODY | param_list |
|---|---|---|
| fx1 | SQL | paramx1 int(11),paramx2 double |

In other words, we can get param_list from information_schema.routines by adding a subquery that accesses information_schema.parameters. Notice the assumption that the list will come out in ordinal-position order; I’m depending on a quirk.
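If the assembling is done on the client side instead, the ordering does not have to be left to chance. Here is a minimal Python sketch, assuming the rows have already been fetched from information_schema.parameters as (ORDINAL_POSITION, PARAMETER_NAME, DTD_IDENTIFIER) tuples. The function name is hypothetical and the sample rows are copied from the result above, deliberately shuffled.

```python
def build_param_list(rows):
    """Rebuild a mysql.proc-style param_list from parameter rows.

    Each row is (ordinal_position, parameter_name, dtd_identifier).
    """
    # Skip ordinal position 0, which describes the return value,
    # and sort explicitly rather than trusting the fetch order.
    params = sorted((row for row in rows if row[0] > 0), key=lambda row: row[0])
    return ",".join(f"{name} {dtd}" for _, name, dtd in params)


rows = [
    (2, "paramx2", "double"),
    (0, None, "char(5)"),
    (1, "paramx1", "int(11)"),
]
param_list = build_param_list(rows)
```

The same idea is available inside the server, because GROUP_CONCAT accepts its own ORDER BY clause, for example GROUP_CONCAT(... ORDER BY ordinal_position).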

body

Let’s try:

CREATE PROCEDURE px1() SELECT _latin1 0xe3;

SELECT body, body_utf8, _latin1 0xe3 FROM mysql.proc WHERE name = 'px1';

Result:

| body | body_utf8 | _latin1 0xe3 |
|---|---|---|
| SELECT _latin1 0xe3 | SELECT 0xe3 | ã |

Now let’s try:

CREATE PROCEDURE px2() SELECT 'abc''def';

SELECT body, body_utf8 FROM mysql.proc WHERE name = 'px2';

Result:

| body | body_utf8 |
|---|---|
| SELECT 'abc''def' | SELECT 'abc'def' |

So you can see that body and body_utf8 are different. In a sense, both are correct — body is what you want if you are going to make a copy of the routine, body_utf8 is what you want if you want to see what the output would look like if you invoked the routine. So it’s pretty useful that mysql.proc has both.

Unfortunately, information_schema.routines does not. It has no equivalent of body. It only has an equivalent of body_utf8.

Loyal readers may recall that I’ve talked before about the possible information losses when making everything UTF8 but this is even worse. Without an equivalent of mysql.proc.body, you cannot reliably make exact copies of routines even if they are in UTF8.

Privilege differences

Actually the change amounts to more than just the differences between the definitions of the tables. There’s also the fact that PRIVILEGES are different — you can GRANT on mysql.* tables, you cannot GRANT on information_schema.* tables. And what users can see differs depending on how granting is done.

Therefore, for all users who currently hold a SELECT privilege on mysql.proc, we are going to have to work around the problem that there is no way to grant the exact same privilege on information_schema.routines. In other words, if the old (MySQL 5.7) statement was

GRANT SELECT ON mysql.proc TO 'jean'@'localhost';

then, to get the same effect in MySQL 8.0, you need a way to let jean see all the columns in all the routines, but not anything other than the routines. This is possible with the DEFINER clause in routines and views. For example, assuming 'root'@'localhost' is a powerful user:

CREATE DEFINER = 'root'@'localhost' SQL SECURITY DEFINER VIEW v_routines AS SELECT * FROM information_schema.routines;

GRANT SELECT ON v_routines TO 'jean'@'localhost';

SHOW

Quote from MySQL 5.7 manual describing SHOW CREATE PROCEDURE and SHOW CREATE FUNCTION:

“To use either statement, you must be the user named in the routine DEFINER clause or have SELECT access to the mysql.proc table.”

Quote from MySQL 8.0 manual describing SHOW CREATE PROCEDURE and SHOW CREATE FUNCTION:

“To use either statement, you must have the global SELECT privilege.”

Essentially, the privilege requirement in 5.7 is what’s needed for looking at mysql.proc, but the privilege requirement in 8.0 is what’s needed for looking at information_schema.routines.

But this time we can’t work around by creating a view. If I’m interpreting “global SELECT privilege” correctly, the expectation is that if you want to say SHOW CREATE PROCEDURE, you need

GRANT SELECT ON *.* TO user_who_wants_to_show_create_procedure;

Either I’m misinterpreting, or MySQL is demanding that you grant a very broad privilege for a very narrow requirement.

This is too bad because, though SHOW statements are junk, this one will be necessary in MySQL 8.0. That’s because it has the body value right: it does not turn SELECT 'abc''def' into SELECT 'abc'def' and so on. Thus, it is the only way to get the equivalent of MySQL 5.7’s mysql.proc.body value. Using a connector, you can put this correct value into another table with something like this, leaving out details:

mysql_real_query("SHOW CREATE PROCEDURE p;")

mysql_fetch_row() /* there's only going to be one row at most */

mysql_fetch_field() /* the third field is `Create procedure` */

mysql_real_query("UPDATE copy_of_routines") /* set body value */

Other ways to get lists of routines

Although MySQL 8.0 is missing mysql.proc, it isn’t missing all the grant-related tables (yet). So you can still say

SELECT * FROM mysql.procs_priv;

Not only that, you can still use SHOW PROCEDURE STATUS — and then put the results in a @variable! As far as I know this is undocumented, but it’s been around for years and nobody has bothered to disallow it.

Try this:

SET @procedurelist = '';

SHOW PROCEDURE STATUS WHERE (@procedurelist := CONCAT(@procedurelist, `Name`, ','));

SELECT @procedurelist;

Result:

create_synonym_db,diagnostics,execute_prepared_stmt, ...

Demonstrably, the @procedurelist variable now has a list of all procedures. Its only recommended use is to show unbelievers that with MySQL all things are possible.

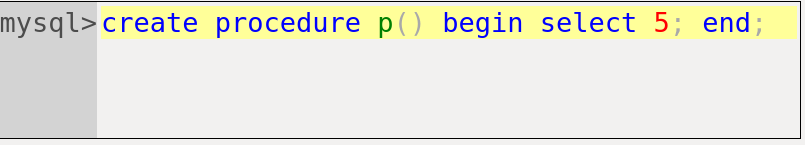

Effect on ocelotgui debugger

As you might have guessed by now, we have a program that uses mysql.proc, namely the Ocelot open source GUI client for MySQL and MariaDB (ocelotgui). Well, one of its feature components is a debugger for MySQL stored routines, and you can see all the “mysql.proc” references in our source code for that module.

Our plan is: wait and see if MySQL makes the transition easier. But if that doesn’t happen, within a few months we’ll change ocelotgui to implement all the methods that I’ve described. So if you want to see how it’s done in detail, with an actual working application, just watch the code related to mysql.proc until it changes. It’s on github.

UPDATE (September 2018) our latest release 1.0.7 can be used for debugging MySQL 8.0 routines.

The First Development Milestone for MySQL 8.0

MySQL 8.0.0 exists.

For general impressions we already have comments by Giuseppe Maxia and Stewart Smith and Serdar Yegulalp.

Two new features looked important to me: modern UCA collations, and roles. So I downloaded and tried them out.

Modern UCA collations

MySQL is going to switch to utf8mb4 for the default character set and add collations based on the latest version of the Unicode Collation Algorithm (UCA 9.0.0). I still see messages indicating the default is latin1, but they’re incorrect: I can put 4-byte UTF-8 characters in columns that I created without explicitly saying utf8mb4.

The new collations are only for utf8mb4. That’s suboptimal. People still have good reasons to use other character sets (I discussed some of them in an earlier blog post). And in any case, a collation that works for utf8mb4’s repertoire will work for every character set that has a pure subset of that repertoire, which is to say, every character set.

The new collations are only for the generic “Default Unicode Collation Element Table” (DUCET), and for Latin-based alphabets. So there are no updates for “persian” or “sinhala”.

For an example, the following table shows changes between the old Swedish collation (utf8mb4_swedish_ci) and the new one (utf8mb4_sv_0900_ai_ci). The “Rule” column has what Unicode says about certain Swedish primary (level-1) comparisons, the “Example” column has what an SQL comparison would look like, the “Old” column has the results I got with utf8mb4_swedish_ci, the “New” column has the results I got with utf8mb4_sv_0900_ai_ci.

| Rule | Example | Old | New |
|---|---|---|---|
| ETH = D | 'Ð' = 'D' | FALSE | TRUE |
| D STROKE = D | 'Đ' = 'D' | FALSE | TRUE |
| THORN = TH | 'Þ' = 'TH' | FALSE | TRUE |
| O DOUBLE ACUTE = O DIAERESIS | 'Ő' = 'Ö' | FALSE | TRUE |
| U DOUBLE ACUTE = Y | 'Ű' = 'Y' | FALSE | TRUE |
| L STROKE = L | 'Ł' = 'L' | FALSE | TRUE |
| A DIAERESIS = E OGONEK | 'Ä' = 'Ę' | FALSE | TRUE |
| OE = O DIAERESIS | 'Œ' = 'Ö' | FALSE | TRUE |
| O CIRCUMFLEX = O DIAERESIS | 'Ô' = 'Ö' | FALSE | TRUE |

Most Swedes don’t know about these rules, they apply to medieval texts or foreign names. But most Swedes do know that rules should cover the edge cases, not just the Stockholm phone book. Because it follows the Unicode rules, the new collation is better.

But the new collation’s name is worse, for two reasons.

(1) The “_ai” suffix, meaning “accent insensitive”, is Microsoftish. There is such a thing, but the definition of “accent” varies between languages and the factors that influence collations can be other things besides accents. Clearer suffixes for extra-sensitive collation names would be “w2” or “l2” (for weight=2 or level=2), and they’re for sorting rather than searching unless you’re Japanese, but a default = no-suffix-for-accents would have been okay.

(2) The “_sv” suffix, meaning “Swedish”, is an unnecessary change. Compatibility with the previous suffix — “swedish” — would not have violated UCA specifications and would have been clearer for people who have used MySQL before.

For a second example, I looked at the new “Latin” collation, utf8mb4_la_0900_ai_ci. This time I couldn’t find any rules file in the Unicode standard directory. There is a UCA chart for Latin but utf8mb4_la_0900_ai_ci obviously isn’t following it at all. Instead it’s like MySQL’s old and silly “Roman” collation, where i=j and u=v. This is not an important collation. But MySQL claims the new collations follow UCA rules, and here is one that doesn’t, so I worry about the others.

This has to be taken in context — MySQL has far better support for character sets and collations than any other open-source DBMS, except sometimes MariaDB. And now it’s a weenie bit more far better. Observations about paucity of new UCA collations, bad names, or standard non-compliance won’t change that fact.

Roles

I discussed MariaDB’s roles in 2014. MySQL’s roles are already in the 8.0 documentation. Is MySQL making an improvement?

The first thing I noticed is that the syntax rules for roles are, too often, the same as the syntax rules for users. This is especially obvious when I ask for things that make no sense for roles, for example:

mysql>CREATE ROLE 'r'@'host';

OK 0 rows affected (0.1 seconds)

mysql>CREATE ROLE '';

Error 1396 (HY000) Operation CREATE USER failed for anonymous user

mysql>GRANT 'root'@'localhost' TO role_name;

OK 0 rows affected (0.1 seconds)

mysql>DROP USER role_name;

OK 0 rows affected (0.1 seconds)

Because of this, some non-standard limitations exist: maximum name length is 32, names are case sensitive, role names cannot be the same as user names, and there is no separate information_schema table.

However, the role-related statements that I tested for MariaDB do work with MySQL as well, and are often exactly the same:

MariaDB: CREATE [OR REPLACE] ROLE [IF NOT EXISTS] role_name [WITH ADMIN ...];

MySQL: CREATE ROLE [IF NOT EXISTS] role_name [,role_name...];

MariaDB: DROP ROLE [IF EXISTS] role_name [,role_name...];

MySQL: DROP ROLE [IF EXISTS] role_name [,role_name...];

MariaDB: SET DEFAULT ROLE {role_name|NONE} [FOR user_name];

MySQL: SET DEFAULT ROLE ALL TO user_name [,user_name...];

MariaDB: SET ROLE {role_name|NONE};

MySQL: SET ROLE {role_name|NONE};

MariaDB: SELECT CURRENT_ROLE() | CURRENT_ROLE;

MySQL: SELECT CURRENT_ROLE();

MariaDB: [no exact equivalent]

MySQL: GRANT CREATE ROLE ON *.* TO grantee;

MariaDB: SHOW GRANTS [FOR role_name];

MySQL: SHOW GRANTS [FOR role_name];

MySQL: SHOW GRANTS [FOR user_name USING role_name[,role_name...]];

MariaDB: GRANT role_name TO grantee [,grantee...] [WITH ADMIN OPTION];

MySQL: GRANT role_name[,role_name...] TO grantee [,grantee...];

(The last GRANT example surprised me. MariaDB has trouble granting multiple roles in one statement, it’s Bug#5772. MySQL appears to be “solving” it by making certain role names illegal unless they’re delimited; I’m not sure that’s the right way to solve it.)

Circular roles (GRANT r1 TO r2; GRANT r2 TO r1;) are allowed but I expect they’ll be disallowed in a later version.

Example:

/* as a user with lots of privileges */

CREATE USER 'u'@'localhost';

CREATE ROLE r;

CREATE TABLE t1 (s1 INT);

CREATE TABLE t2 (s1 INT);

GRANT SELECT ON t1 TO r;

GRANT r TO 'u'@'localhost';

/* as user 'u'@'localhost' */

SET ROLE r;

SELECT * FROM t1;

SELECT * FROM t2;

/* The first SELECT succeeds, the second SELECT fails. */

To generalize: so far MySQL 8.0.0 allows creation of roles but they have to look like users. So the syntax is undesirable, but they work properly.

Again, remember the context. There’s nothing wrong with a feature that’s not ready, until MySQL declares that it’s ready.

Typos

MySQL’s announcement, buried in a section about minor fixes, says “Foreign key names as stored in the foreign_keys and foreign_key_column_usage tables are a maximum of 64 characters, per the SQL standard”. Er, up to a point. The SQL standard says “In a regular identifier, the number of identifier parts shall be less than 128.”

Us Too

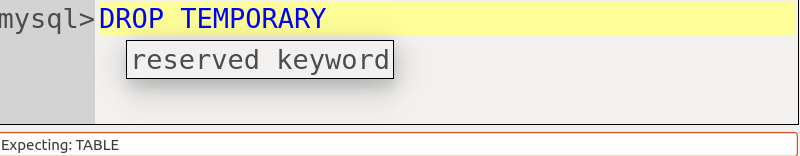

We have a new-version announcement too. Version 1.0.3 of the Ocelot Graphical User Interface (ocelotgui) for MySQL and MariaDB came out on Tuesday September 27 2016. Some new items are …

As well as getting result sets in the result-set widget, one can get them added to the history widget, with the same format as what the mysql client outputs.

As well as predicting what the next word should be, Ocelot’s syntax recognizer makes it possible to show hints if the user hovers over a word.

Finally, there is a rudimentary formatter. Clicking the edit menu item Edit|Format will change indentation, make keywords upper case, etc. I say “rudimentary” because, without a standard to follow, one must depend on taste, and nobody shares the taste that’s on display here.

Documentation is now on ocelot.ca/index.htm. C++ source and Linux-ready packages are on github.com/ocelot-inc/ocelotgui.

Standard SQL/JSON and MySQL 5.7 JSON

Support for storing and querying JSON within SQL is progressing for the ANSI/ISO SQL Standard, and for MySQL 5.7. I’ll look at what’s new, and do some comparisons.

The big picture

The standard document says

The SQL/JSON path language is a query language used by certain SQL operators (JSON_VALUE, JSON_QUERY, JSON_TABLE, and JSON_EXISTS, collectively known as the SQL/JSON query operators) to query JSON text. The SQL/JSON path language is not, strictly speaking, SQL, though it is embedded in these operators within SQL. Lexically and syntactically, the SQL/JSON path language adopts many features of ECMAScript, though it is neither a subset nor a superset of ECMAScript. The semantics of the SQL/JSON path language are primarily SQL semantics.

Here is a chart that shows the JSON-related data types and functions in the standard, and whether a particular DBMS has something with the same name and a similar functionality.

| Standard | Oracle | SQL Server | MySQL |
|---|---|---|---|
| Conventional data type | YES | YES | NO |
| JSON_VALUE function | YES | YES | NO |
| JSON_EXISTS function | YES | NO | NO |
| JSON_QUERY function | YES | YES | NO |
| JSON_TABLE function | YES | NO | NO |

My source re the standard is a draft copy of ISO/IEC 9075-2 SQL/Foundation. For Oracle 12c read Oracle’s whitepaper. For SQL Server 2016 read MSDN’s blog. My source re MySQL 5.7 is the MySQL manual and the latest source-code download of version 5.7.9.

Now, what is the significance of the lines in the chart?

Conventional data type

By “conventional”, I mean that in standard SQL JSON strings should be stored in one of the old familiar data types: VARCHAR, CLOB, etc. It didn’t have to be this way, and any DBMS that supports user-defined types can let users be more specific, but that’s what Oracle and Microsoft accept.

MySQL 5.7, on the other hand, has decided that JSON shall be a new data type. It’s closely related to LONGTEXT: if you say

CREATE TABLE j1 AS SELECT UPPER(CAST('{}' AS JSON));

SHOW CREATE TABLE j1;

then you get LONGTEXT. But if you use the C API to ask the data type, you get MYSQL_TYPE_JSON=245 (aside: this is not documented). And it differs because, if you try to put in non-JSON data, you get an error message.

At least, that’s the theory. It didn’t take me long to find a way to put non-JSON data in:

CREATE TABLE j2a (s1 INT, s2 JSON);

INSERT INTO j2a VALUES (1,'{"a": "VALID STUFF"}');

CREATE TABLE j2b AS SELECT s1, UPPER(s2) AS s2 FROM j2a;

INSERT INTO j2b VALUES (NULL, 'INVALID STUFF');

ALTER TABLE j2b MODIFY COLUMN s2 JSON;

… That unfortunately works, and now if I say “SELECT * FROM j2b;” I get an error message “The JSON binary value contains invalid data”. Probably bugs like this will disappear soon, though.

By making a new data type, MySQL has thrown away some of the advantages that come with VARCHAR or TEXT. One cannot specify a maximum size — everything is like LONGTEXT. One cannot specify a preferred character set and collation — everything is utf8mb4 and utf8mb4_bin. One cannot take advantage of all the string functions — BIN() gives meaningless results, for example. And the advantage of automatic validity checking could have been delivered with efficient constraints or triggers instead. So why have a new data type?

Well, PostgreSQL has a JSON data type. As I’ve noticed before, PostgreSQL can be a poor model if one wants to follow the standard. And it will not surprise me if the MariaDB folks also decide to make a JSON data type, because I know that they are following similar logic for an “IP address” data type.

By the way, the validity checking is fairly strict. For example, ‘{x:3}’ is considered invalid because quote marks are missing, and ‘{“x”:.2}’ is considered invalid because the value has no leading digit.
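Both of those inputs break the JSON grammar itself, so any strict parser should reject them. Here is a rough sketch using Python's standard json module as a stand-in. That substitution is my assumption: it is not MySQL's validator, and the two need not agree on every edge case.

```python
import json

def is_valid_json(text):
    """Return True if text parses under Python's strict JSON parser."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False

# The two rejected examples from the text, each next to a corrected form.
candidates = ['{"x": 3}', '{x:3}', '{"x":0.2}', '{"x":.2}']
results = {text: is_valid_json(text) for text in candidates}
for text, ok in results.items():
    print(text, "valid" if ok else "invalid")
```

The unquoted key and the number without a leading digit are the only differences between the accepted and rejected strings.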

JSON_VALUE function

For an illustration and example it’s enough to describe the standard’s JSON_VALUE and MySQL’s JSON_EXTRACT.

The standard idea is: pass a JSON string and a JavaScript-like expression, get back an SQL value, which will generally be a scalar value. For example,

SELECT JSON_VALUE(@json, @path_string) FROM t;

SELECT JSON_VALUE(json_column_name, 'lax $.c') AS c FROM t;

There are optional clauses for deciding what to do if the JSON string is invalid, or contains missing and null components. Again, the standard’s JSON_VALUE is what Oracle and Microsoft accept. There’s some similarity to what has gone before with SQL/XML.

MySQL, on the other hand, accomplishes some similar things with JSON_EXTRACT. For example,

SELECT JSON_EXTRACT(@json, @path_string);

SELECT JSON_EXTRACT(json_column_name, '$.c') AS c FROM t;

And the result is not an ordinary MySQL scalar, it has type = JSON. In the words of physicist I.I.Rabi when confronted with a new particle, “Who ordered that?”

Well, JSON_EXTRACT and some of the other MySQL functions have fairly close analogues, in both name and functionality, with Google’s BigQuery and with SQLite. In other words, instead of the SQL standard, MySQL has ended up with something like the NoSQL No-standard.

I should stress here that MySQL is not “violating” the SQL standard with JSON_EXTRACT. It is always okay to use non-standard syntax. What’s not okay is to use standard syntax for a non-standard purpose. And here’s where I bring in the slightly dubious case of the “->” operator. In standard SQL “->”, which is called the “right arrow” operator, has only one purpose: dereferencing. In MySQL “->” has a different purpose: a shorthand for JSON_EXTRACT. Since MySQL will never support dereferencing, there will never be a conflict in practice. Nevertheless, technically, it’s a violation.

Observed Behaviour

When I tried out the JSON data type with MySQL 5.7.9, I ran into no exciting bugs, but a few features.

Consistency doesn’t apply for INSERT IGNORE and UPDATE IGNORE. For example:

CREATE TABLE t1 (date DATE, json JSON);

INSERT IGNORE INTO t1 (date) VALUES ('invalid date');

INSERT IGNORE INTO t1 (json) VALUES ('{invalid json}');

The INSERT IGNORE into the date column inserts null with a warning, the INSERT IGNORE into the json column returns an error.

Some error messages might still need adjustment. For example:

CREATE TABLE ti (id INT, json JSON) PARTITION BY HASH(json);

Result: an error message = “A BLOB field is not allowed in partition function”.

Comparisons of JSON_EXTRACT results don’t work. For example:

SET @json = '{"a":"A","b":"B"}';

SELECT GREATEST(

JSON_EXTRACT(@json,'$.a'),

JSON_EXTRACT(@json,'$.b'));

The result is a warning “This version of MySQL doesn’t yet support ‘comparison of JSON in the LEAST and GREATEST operators'”, which is a symptom of the true problem, that JSON_EXTRACT returns a JSON value instead of a string value. The workaround is:

SET @json = '{"a":"A","b":"B"}';

SELECT GREATEST(

CAST(JSON_EXTRACT(@json,'$.a') AS CHAR),

CAST(JSON_EXTRACT(@json,'$.b') AS CHAR));

… which returns “B” — a three-character string, including the quote marks.

Not The End

The standard might change, and MySQL certainly will change anything that’s deemed wrong. Speaking of wrong, I might have erred too. And I certainly didn’t give justice to all the other details of MySQL 5.7 JSON.

Meanwhile

The Ocelot GUI client for MySQL and MariaDB is still version 0.8 alpha, but since the last report there have been bug fixes and improvements to the Help option. Have a look at the new manual by going to https://github.com/ocelot-inc/ocelotgui and scrolling down till you see the screenshots and the words “User Manual”.

Decrypt .mylogin.cnf

General-purpose MySQL applications should read MySQL option files like /etc/my.cnf, ~/.my.cnf, … and ~/.mylogin.cnf. But ~/.mylogin.cnf is encrypted. That’s a problem for our ocelotgui GUI application, and I suppose other writers of Linux applications could face the same problem, so I’ll share the code we’ll use to solve it.

First some words of defence. I think that encryption (or more correctly obfuscation) is okay as an option: a customer asked for it, and it prevents the most casual snoopers — rather like a low fence: anyone can get over it, but making it a bit troublesome will make most passersby pass by. I favoured the idea, though other MySQL employees were against it on the old “false sense of security” argument. After all, by design, the data must be accessible without requiring credentials. So just XORing the file contents with a fixed key would have done the job.

Alas, the current implementation does more: the configuration editor not only XORs, it encrypts with AES 128-bit ecb. The Oxford-dictionary word for this is supererogation. This makes reading harder. I’ve seen only one bug report / feature request touching on the problem, but I’ve also seen that others have looked into it and provided some solutions. Kolbe Kegel showed how to display the passwords, Serge Frezefond used a different method to display the whole file. Great. However, their solutions require downloading MySQL source code and rebuilding a section. No good for us, because ocelotgui contains no MySQL code and doesn’t statically link to it. We need code that accesses a dynamic library at runtime, and unless I missed something big, the necessary stuff isn’t exported from the mysql client library.

Which brings us to … ta-daa … readmylogin.c. This program will read a .mylogin.cnf file and display the contents. Most of it is a BSD licence, so skip to the end to see the twenty lines of code. Requirements are gcc, and libcrypto.so (the openSSL library which I believe is easily downloadable on most Linux distros). Instructions for building and running are in the comments. Cutters-and-pasters should beware that less-than-sign or greater-than-sign may be represented with HTML entities.

/*

readmylogin.c Decrypt and display a MySQL .mylogin.cnf file.

Uses openSSL libcrypto.so library. Does not use a MySQL library.

Copyright (c) 2015 by Ocelot Computer Services Inc.

All rights reserved.

Redistribution and use in source and binary forms, with or without

modification, are permitted provided that the following conditions are met:

* Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

* Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

* Neither the name of the <organization> nor the

names of its contributors may be used to endorse or promote products

derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND

ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED

WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

DISCLAIMED. IN NO EVENT SHALL <COPYRIGHT HOLDER> BE LIABLE FOR ANY

DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES

(INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;

LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND

ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS

SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

To compile and link and run with Linux and gcc:

1. Install openSSL

2. If installation puts libcrypto.so in an unusual directory, say

export LD_LIBRARY_PATH=/unusual-directory

3. gcc -o readmylogin readmylogin.c -lcrypto

To run, it's compulsory to specify where the file is, for example:

./readmylogin .mylogin.cnf

MySQL may change file formats without notice, but the following is

true for files produced by mysql_config_editor with MySQL 5.6:

* First four bytes are unused, probably reserved for version number

* Next twenty bytes are the basis of the key, to be XORed in a loop

until a sixteen-byte key is produced.

* The rest of the file is, repeated as necessary:

four bytes = length of following cipher chunk, little-endian

n bytes = cipher chunk

* Encryption is AES 128-bit ecb.

* Chunk lengths are always a multiple of 16 bytes (128 bits).

Therefore there may be padding. We assume that any trailing byte

containing a value less than '\n' is a padding byte.

To make the code easy to understand, all error handling code is

reduced to "return -1;" and buffers are fixed-size.

To make the code easy to build, the line

#include "/usr/include/openssl/aes.h"

is commented out, but can be uncommented if aes.h is available.

This is version 1, May 21 2015.

More up-to-date versions of this program may be available

within the ocelotgui project https://github.com/ocelot-inc/ocelotgui

*/

#include <stdio.h>

#include <fcntl.h>

#include <unistd.h> /* for read() and lseek() */

//#include "/usr/include/openssl/aes.h"

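#ifndef HEADER_AES_H

#define AES_BLOCK_SIZE 16

typedef struct aes_key_st { unsigned char x[244]; } AES_KEY;

/* Prototypes that aes.h would normally supply; needed while it is commented out. */

int AES_set_decrypt_key(const unsigned char *userKey, const int bits, AES_KEY *key);

void AES_decrypt(const unsigned char *in, unsigned char *out, const AES_KEY *key);

#endif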

unsigned char cipher_chunk[4096], output_buffer[65536];

int fd, cipher_chunk_length, output_length= 0, i;

unsigned char key_in_file[20];

unsigned char key_after_xor[AES_BLOCK_SIZE] = {0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0};

AES_KEY key_for_aes;

int main(int argc, char *argv[])

{

if (argc < 2) return -1; /* the file name argument is compulsory */

if ((fd= open(argv[1], O_RDONLY)) == -1) return -1;

if (lseek(fd, 4, SEEK_SET) == -1) return -1;

if (read(fd, key_in_file, 20) != 20) return -1;

for (i= 0; i < 20; ++i) *(key_after_xor + (i%16))^= *(key_in_file + i);

AES_set_decrypt_key(key_after_xor, 128, &key_for_aes);

while (read(fd, &cipher_chunk_length, 4) == 4)

{

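if (cipher_chunk_length <= 0 || cipher_chunk_length > (int) sizeof(cipher_chunk)) return -1;

if (output_length + cipher_chunk_length >= (int) sizeof(output_buffer)) return -1;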

if (read(fd, cipher_chunk, cipher_chunk_length) != cipher_chunk_length) return -1;

for (i= 0; i < cipher_chunk_length; i+= AES_BLOCK_SIZE)

{

AES_decrypt(cipher_chunk+i, output_buffer+output_length, &key_for_aes);

output_length+= AES_BLOCK_SIZE;

while (output_length > 0 && *(output_buffer+(output_length-1)) < '\n') --output_length;

}

}

*(output_buffer + output_length)= '\0';

printf("%s.\n", output_buffer);

}
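For readers who would rather see the file layout than the C, here is a small sketch of the non-cryptographic parts only: folding the twenty key bytes into a sixteen-byte key, walking the length-prefixed chunks, and trimming padding. It follows the format notes in the comment above. The function names are hypothetical, the sample bytes are made up rather than taken from a real .mylogin.cnf, and the AES step is left out because Python's standard library has no AES.

```python
import struct

def fold_key(key_in_file):
    """XOR the 20 key bytes from the file into a 16-byte AES key."""
    key = bytearray(16)
    for i, byte in enumerate(key_in_file):
        key[i % 16] ^= byte
    return bytes(key)

def split_chunks(data):
    """Yield the cipher chunks found after the 4 unused bytes and 20 key bytes."""
    offset = 4 + 20
    while offset + 4 <= len(data):
        (length,) = struct.unpack_from("<i", data, offset)  # little-endian length
        offset += 4
        if length <= 0 or length % 16 != 0 or offset + length > len(data):
            raise ValueError("unexpected chunk length")
        yield data[offset:offset + length]
        offset += length

def strip_padding(plain):
    """Drop trailing bytes whose value is below '\\n' (assumed to be padding)."""
    return plain.rstrip(bytes(range(10)))

# Made-up sample: 4 unused bytes, 20 key bytes, then one 16-byte chunk.
sample_key = bytes(range(20))
sample_file = b"\x00" * 4 + sample_key + struct.pack("<i", 16) + b"A" * 16
chunks = list(split_chunks(sample_file))
print(fold_key(sample_key).hex(), len(chunks))
```

Each chunk would then be decrypted sixteen bytes at a time with AES-128 in ECB mode, which is the part the C program hands to libcrypto.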

MySQL 5.7 supports the GB18030 Chinese Character Set

My former boss at MySQL sent out a notice that MySQL 5.7.4 now supports the GB18030 character set, thus responding to requests that have been appearing since 2005. This is a big deal because the Chinese government demands GB18030 support, and because the older simplified-Chinese character sets (gbk and gb2312) have a much smaller repertoire (that is, they have too few characters). And this is real GB18030 support: I can define columns and variables with CHARACTER SET GB18030. That’s rare. Oracle 12c and SQL Server 2012 and PostgreSQL 9.3 can’t do it. (They allow input from GB18030 clients but they convert it immediately to Unicode.) Among big-time DBMSs, until now, only DB2 has treated GB18030 as a first-class character set.

Standard Adherence

We’re talking about the current version of the standard, GB18030-2005 “IT Chinese coded character set”, especially its description of 70,244 Chinese characters. I couldn’t puzzle out the Chinese wording in the official document, all I could do was use translate.google.com on some excerpts. But I’ve been told that the MySQL person who coded this feature is Chinese, so they’ll have had better luck. What I could understand was what are the difficult characters, what are the requirements for a claim of support, and what the encoding should look like. From the coder’s comments, it’s clear that part was understood. I did not check whether there was adherence for non-mandatory parts, such as Tibetan script.

Conversions

The repertoire of GB18030 ought to be the same as the Unicode repertoire. So I took a list of every Unicode character, converted to GB18030, and converted back to Unicode. The result in every case was the same Unicode character that I’d started with. That’s called “perfect round tripping”. As I explained in an earlier blog post “The UTF-8 World Is Not Enough”, storing Chinese characters with a Chinese character set has certain advantages. Well, now the biggest disadvantage has disappeared.
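The round-trip idea is easy to sketch outside the DBMS. The snippet below uses Python's built-in gb18030 and gb2312 codecs, which is my substitution: it says nothing about MySQL's own conversion tables, and it tries only three hand-picked characters rather than the full Unicode list.

```python
def round_trips(text, encoding="gb18030"):
    """True if encoding and then decoding returns exactly the same string."""
    return text.encode(encoding).decode(encoding) == text

def can_encode(text, encoding):
    """True if the character set has a representation for every character."""
    try:
        text.encode(encoding)
        return True
    except UnicodeEncodeError:
        return False

samples = {
    "ASCII letter": "A",
    "common Han character": "\u4e2d",
    "supplementary-plane Han character": "\U00020000",
}
for label, char in samples.items():
    size = len(char.encode("gb18030"))
    print(label, size, "byte(s), round trip ok:", round_trips(char))
print("gb2312 can hold U+20000:", can_encode("\U00020000", "gb2312"))
```

The last line shows the repertoire problem with the older character set: the supplementary-plane character has no gb2312 encoding at all.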

Hold on — how is perfect round tripping possible, given that MySQL frequently refers to Unicode 4.0, and some of the characters in GB18030-2005 are only in Unicode 4.1? Certainly that ought to be a problem according to the Unicode FAQ and this extract from Ken Lunde’s book. But it turns out to be okay because MySQL doesn’t actually disallow those characters — it accepts encodings which are not assigned to characters. Of course I believe that MySQL should have upgraded the Unicode support first, and added GB18030 support later. But the best must not be an enemy of the good.

Also the conversions to and from gb2312 work fine, so I expect that eventually gb2312 will become obsolete. It’s time for mainland Chinese users to consider switching over to gb18030 once MySQL 5.7 is GA.

Collations

The new character set comes with three collations: one trivial, one tremendous, one tsk, tsk.

The trivial collation is gb18030_bin. As always the bin stands for binary. I expect that as always this will be the most performant collation, and the only one that guarantees that no two characters will ever have the same weight.

The tremendous collation is gb18030_unicode_520_ci. The “unicode_520” part of the name really does mean that the collation table comes from “Unicode 5.2” and this is the first time that MySQL has taken to heart the maxim: what applies to the superset can apply to the subset. In fact all MySQL character sets should have Unicode collations, because all their characters are in Unicode. So to test this, I went through all the Unicode characters and their GB18030 equivalents, and compared their weights with WEIGHT_STRING:

WEIGHT_STRING(utf32_char COLLATE utf32_unicode_520_ci) to

WEIGHT_STRING(gb18030_char COLLATE gb18030_unicode_520_ci).

Every utf32 weight was exactly the same as the gb18030 weight.

The tsk, tsk collation is gb18030_chinese_ci.

The first bad thing is the suffix chinese_ci, which will make some people think that this collation is like gb2312_chinese_ci. (Such confusion has happened before for the general_ci suffix.) In fact there are thousands of differences between gb2312_chinese_ci and gb18030_chinese_ci. Here’s an example.

mysql> CREATE TABLE t5

-> (gb2312 CHAR CHARACTER SET gb2312 COLLATE gb2312_chinese_ci,

-> gb18030 CHAR CHARACTER SET gb18030 COLLATE gb18030_chinese_ci);

Query OK, 0 rows affected (0.22 sec)

mysql> INSERT INTO t5 VALUES ('[','['),(']',']');

Query OK, 2 rows affected (0.01 sec)

Records: 2 Duplicates: 0 Warnings: 0

mysql> SELECT DISTINCT gb2312 from t5 ORDER BY gb2312;

+--------+

| gb2312 |

+--------+

| ] |

| [ |

+--------+

2 rows in set (0.00 sec)

mysql> SELECT DISTINCT gb18030 from t5 ORDER BY gb18030;

+---------+

| gb18030 |

+---------+

| [ |

| ] |

+---------+

2 rows in set (0.00 sec)

See the difference? The gb18030 order is obviously better (‘]’ should be greater than ‘[’), but when two collations are wildly different they shouldn’t both be called “chinese_ci”.

The second bad thing is the algorithm. The new chinese_ci collation is based on pinyin for Chinese characters, and binary comparisons of the UPPER() values for non-Chinese characters. This is pretty well useless for non-Chinese. I can bet that somebody will observe “well, duh, it’s a Chinese character set” — but I can’t see why one would use an algorithm for Latin/Greek/Cyrillic/etc. characters that’s so poor. There’s a Common Locale Data Repository for tailoring for Chinese, there are MySQL worklog tasks that explain the brave new world, there’s no need to invent an idiolect when there’s a received dialect.

Documentation

The documentation isn’t up to date yet — there’s no attempt to explain what the new character set and its collations are about, and no mention at all in the FAQ.

But the worklog task WL#4024: gb18030 Chinese character set gives a rough idea of what the coder had in mind before starting. It looks as if WL#4024 was partly copied from http://icu-project.org/docs/papers/unicode-gb18030-faq.html so that’s also worth a look.

For developers who just need to know what’s going on now, just re-read this blog post. What I’ve described should be enough for people who care about Chinese.

I didn’t look for bugs with full-text or LIKE searches, and I didn’t look at speed. But I did look hard for problems with the essentials, and found none. Congratulations are due.

Update on July 17 2014: A source at MariaDB tells me that they’ve worked on GB18030 too. And the MySQL folks have closed a GB18030 bug (that ‘Y’ = tilde in the Chinese collation), not realizing that it’s a duplicate of a bug that I reported seven years ago.

MySQL versus Firebird

Firebird is an open-source DBMS with a long history and good SQL support. Although I measured Firebird’s importance as smaller than MySQL’s or MariaDB’s, it exists, and might grow a bit when Firebird becomes the default LibreOffice DBMS.

I decided to compare the current versions of MySQL 5.6 and Firebird SQL 2.5. I only looked at features that end users can see, without benchmarking. Preparing for the comparison was easy. I looked at the instructions for downloading Firebird with Ubuntu and within 15 minutes I was entering SQL statements. The documentation is disorganized but when in doubt I could guess what to do, since the Firebird folks care about standards.

I’ll organize the discussion according to the ANSI/ISO feature list. I’ll skip the features which both products support equally, which means I’ll skip the great majority, because both products are very similar. Then: For every feature where MySQL is better, MySQL gets a point. For every feature where Firebird is better, Firebird gets a point. The only way to be objective is to be arbitrary.

Mandatory Features

| Feature | Firebird Point | MySQL Point | Explanation |
|---|---|---|---|
| E011-03 DECIMAL and NUMERIC types | | 1 | Firebird maximum number of digits = 18. MySQL maximum = 65. |
| E021-02 CHARACTER VARYING data type | | 1 | Firebird maximum VARCHAR length = 32767. MySQL maximum = 65535. |
| E021-07 Character concatenation | 1 | | Firebird concatenation operator is \|\|. MySQL has CONCAT() by default. |
| E031-01 Delimited identifiers | 1 | | Firebird delimiter “” means case-sensitive. MySQL has no viable equivalent. |
| E041-04 Basic foreign key | 1 | | In Firebird one can use syntax variants that MySQL/InnoDB ignores. |
| E101-03 Searched UPDATE statement | 1 | | Firebird has fixed a small flaw. MySQL still has the flaw. |
| E141-08 CHECK clause | 1 | | MySQL doesn’t have this. |
| F031-03 GRANT statement | 1 | 1 | Only Firebird can grant to PUBLIC. But only MySQL can grant TRIGGER. One point each. |
| F051-03 TIMESTAMP data type | | 1 | Firebird can have 4 digits for seconds precision, MySQL can have 6. |
| T321-01 User-defined SQL functions | | 1 | Firebird only has procedures, and they’re PLSQL syntax. MySQL has standard syntax. |
| 9075-11 information_schema | | 1 | Firebird doesn’t have this. |

Optional Features

| Feature | Firebird Point | MySQL Point | Explanation |
|---|---|---|---|
| F121-01 GET DIAGNOSTICS | | 1 | Firebird doesn’t have this. |
| F200 TRUNCATE | | 1 | Firebird doesn’t have this. |
| F201 CAST function | 1 | | In Firebird I can cast to (among other data types) VARCHAR, SMALLINT, INT, BIGINT, NUMERIC, TIMESTAMP. In MySQL, I can’t. |
| F221 Explicit defaults | | 1 | Both products allow DEFAULT in CREATE statements, but only MySQL allows DEFAULT in UPDATE or INSERT statements. |
| F251 Domain support | 1 | | MySQL doesn’t have this. |
| F312 Merge statement | 1 | | MySQL doesn’t have a MERGE statement, although it does have near equivalents. |
| F391 Long identifiers | | 1 | Firebird maximum length = 31. MySQL maximum length = 64. Both are sub-standard. |
| F401-02 Full outer join | 1 | | MySQL doesn’t have this. |
| F461 Named character sets | | 1 | See Note #1. |
| F531 Temporary tables | 1 | | Firebird allows CREATE GLOBAL TEMPORARY. MySQL has temporary tables in a non-standard way. |
| F690 Collation support | | 1 | See Note #2. |
| T021 BINARY and VARBINARY data types | | 1 | Firebird doesn’t have this, although it does allow specifying a character set as OCTETS, which is comparable to MySQL’s “binary”. |
| T121 WITH (excluding RECURSIVE) in query | 1 | | Firebird recently added WITH support. MySQL doesn’t have it. Incidental note: misinformation has been spread about MySQL’s history for this feature, for example the first comment on this blog post. The fact is that in 2004 MySQL decided to support CONNECT BY, not WITH. Then it didn’t implement it. |
| T131 Recursive query | 1 | | MySQL doesn’t have this. |
| T141 SIMILAR predicate | 1 | | MySQL doesn’t have this. |
| T171 LIKE clause in table definition | | 1 | Firebird doesn’t have this. |
| T172 AS clause in table definition | | 1 | Firebird doesn’t have this. |
| T176 Sequence generator support | 1 | | Firebird only supports a “CREATE SEQUENCE sequence-name” statement, but that’s enough for a point. |
| T211 Basic trigger capability | | | See the table in an earlier post but MySQL has more standard CREATE TRIGGER syntax. Can’t decide. |
| T281 SELECT privilege with column granularity | | 1 | Firebird doesn’t have this. |
| T331 Basic roles | 1 | | Firebird has 50% support. MySQL has nothing. MariaDB roles are beta. |

Note #1: Re character sets: Ignoring duplicates, alias names, and slight variations: Firebird multi-byte character sets are gbk, sjis, utf-8, big5, gb2312, eucjp, ksc 5601, gb18030. MySQL multi-byte character sets are gbk, sjis, utf-8, big5, gb2312, eucjp, euckr, utf-16, utf-32. As I put it in an earlier blog post, “The UTF-8 world is not enough”. MySQL has more, so it gets the point.

Note #2: Re collations: Although Firebird deserves special mention for supporting a slightly later version of the Unicode Collation Algorithm, the only multi-byte character set for which Firebird has many collations is UTF-8. Firebird has only four collations for UTF-8: ucs_basic (code point order), and unicode / unicode_ci / unicode_ci_ai (variations of UCA order with and without case sensitivity or accent sensitivity). MySQL has additionally: Croatian, Czech, Danish, Estonian, German, Hungarian, Icelandic, Latvian, Lithuanian, Persian, Polish, Romanian, Sinhala, Slovak, Slovenian, Spanish, Swedish, Turkish, Vietnamese. Incidental side note: guess whom the Firebird manual points to for extra documentation about its character sets? None other than the guy who coded MySQL’s collations, a certain A. Barkov, formerly of MySQL, now of MariaDB.

Total points for Firebird: 16. Total points for MySQL: 16.

Update: in an earlier edition of this post, I broke the tie by giving Firebird a delay-of-game penalty on the basis that the makers started talking about version 3.0 in 2011, and version 3.0 is still alpha, and I concluded “MySQL wins by a score of 16 to 16”. That wasn’t serious. The fair way to break the tie is to decide which features should have more weight.

Deprecating MySQL Features

If you haven’t read the Wikipedia article on deprecation, you might not know it’s from the Latin word deprecare, meaning “to ward off (a disaster) by prayer.” That pretty well describes the quandary for any software product that has a big established user base. Is it better to keep junk features, in case removing them will cause disasters for the old users? Or is it better to remove junk features, to avoid ridicule and to make the manual slimmer for the new users?

Historically MySQL has deprecated with caution, and usually MySQL has followed these steps:

(1) In version X, for any SQL statement that appears to use the feature, return a warning that the feature “has been deprecated and may be removed in a future release”.

(2) Also in version X, put a notification in the manual. Notifications have a regular pattern so they’re easy to find by looking in the “What’s new” section, such as http://dev.mysql.com/doc/relnotes/mysql/5.7/en/news-5-7-4.html.

Alternatively, one can look for the “nutshell” pages, such as https://dev.mysql.com/doc/refman/5.6/en/mysql-nutshell.html and https://dev.mysql.com/doc/refman/5.5/en/mysql-nutshell.html. Or, in googlespeak, site:dev.mysql.com/doc “what’s new” “deprecated”. Notice that I am pointing only to the MySQL manual, because MariaDB does not appear to have an equivalent (the MariaDB “deprecation policy” page only talks about supported platforms).

(3) In version X+1 or X+2, actually remove the feature and start throwing syntax errors.

My all-time favourite deprecation was of the TIMESTAMP(M) data type before version 4.1. The M stood for “display width”, for example, with TIMESTAMP(6) you’d only see the first few digits. We realized that was in conflict with the ANSI/ISO standard, so it became deprecated in 4.x, became a syntax error in 5.x, and then — years after the original warning — the syntax became legal again with a different meaning, so now the 6 in TIMESTAMP(6) means “fractional seconds”. Because there was a period when the syntax was simply illegal, it was practically impossible to move an old application to the new format without noticing anything.

These are my nominations for favourite deprecations in 5.6 and 5.7.

SHOW AUTHORS.

SHOW AUTHORS was an “Easter egg”. Naturally I reported it as such at the time because Easter eggs are more than bugs, they are vandalism. Eventually the feature got documented, but the smell of rotten eggs was in my nose for years afterward, so I’m relieved it’s gone.

INFORMATION_SCHEMA.PROFILING

Faithful readers of my blog posts will of course recall me saying in 2008:

A bit of a caveat: I was totally against adding this feature, and some of my bias might show. But I’m not trying to argue about it. I do believe that once we add a feature we should explain it, and all I’m trying to do is explain here.

… which had nothing to do with the quality of the user contribution. People who know me as the original crusader for PERFORMANCE_SCHEMA might guess that I saw this feature as out of step.

INSERT DELAYED

INSERT DELAYED wasn’t junk per se. The problem with little non-standard performance-enhancing niblets is that they’re always non-orthogonal. People sense that. So the bug report / feature request for (why not?) “UPDATE DELAYED” got about 15 votes. Adding the EVENTS feature didn’t quell the protesters, because any rational person could see that, if INSERT DELAYED was okay syntax, UPDATE DELAYED should also be. (This makes two posts in a row where I’m railing about lack of orthogonality; I brought it up in my blog post last week about MariaDB’s DELETE … RETURNING feature. By the way, I updated that post to show what decent standard-fearing folk would do instead of copying PostgreSQL.) So, to stop the protests that there’s a precedent, get rid of the precedent. Well done.

What these deprecations are telling me is that somebody at MySQL at last has the authority and determination to clean the garage. The product is not just getting new good features, it’s losing old bad ones.

MySQL’s Date Support Is Not Absurd

MySQL has unfortunate rules for dates with two-digit years, for the date range, for post-decimal digits, for timestamp defaults … But other DBMSs can be equally bizarre.

Two-digit years

Start with two-digit years. Summarizing from the manual:

If the two-digit value is <= 69, add 2000. Else add 1900. For example, if it's 65, then it's 2065.
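Written out as code, the rule is a single comparison. This is only a sketch of the rule as summarized above, with a hypothetical function name; it is not taken from MySQL's source.

```python
def expand_two_digit_year(yy):
    """Map 00-69 to 2000-2069 and 70-99 to 1970-1999."""
    if not 0 <= yy <= 99:
        raise ValueError("expected a two-digit year")
    return yy + 2000 if yy <= 69 else yy + 1900

for yy in (0, 65, 69, 70, 99):
    print(yy, "->", expand_two_digit_year(yy))
```

The cutoff at 69 is fixed, which is what separates this rule from the sliding-window rules mentioned below for some other DBMSs.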

It’s an arbitrary extra detail that users have to learn. But PostgreSQL has exactly the same rule. And three other DBMSs — DB2, Oracle, Firebird — have an even more complex rule, saying in effect that the interpretation will vary depending what year it is when you do the input. SQL Server is even worse, it allows users to change the rule slightly. Only the SQL standard has a rule that’s easy to learn and never changes: you have to enter four-digit years. Time for a modernized-English Shakespeare quote:

Hamlet: Why was he sent into England?

First Clown: Why, because he was mad. He shall recover his wits there, or, if he does not, it is no great matter there.

Hamlet: Why?

First Clown: It will not be seen in him there. There the men are as mad as he.

Applying the clown’s logic, we see that MySQL’s behaviour, in context, is no great matter.

Range

Different DBMSs have different minimum dates, though they mostly have the same maximum.

| DBMS (data type) | Minimum | Maximum |
|---|---|---|
| Oracle 12c (timestamp) | 4713-01-01 BC Julian | 9999-12-31 |
| SQL Server 2012 (datetime) | 1753-01-01 AD Gregorian | 9999-12-31 |
| SQL Server 2012 (datetime2) | 0001-01-01 AD Gregorian | 9999-12-31 |
| DB2 9 (timestamp) | 0001-01-01 AD Gregorian | 9999-12-31 |
| Firebird | 0100-01-01 AD | 32768-02-29 |
| PostgreSQL (timestamp) | 4714-11-24 BC Gregorian | 294276-12-31 |
| MySQL (datetime) | 1000-01-01 AD Gregorian | 9999-12-31 |

At first glance it looks as if MySQL is out of step here, since everybody else can store dates before 1000 AD. (SQL Server was out of step too, until Microsoft introduced the datetime2 data type in 2008.) But actually MySQL can store dates before that. Just don’t try calculating on them, goes the recommendation. However, Oracle, and Oracle alone, switches to the Julian calendar for dates before October 1582. I think that’s becoming the conventional thing to do, even though (sob) it’s not standard SQL. Therefore, yes, MySQL is out of step, but so is everybody else except Oracle.

Post-decimal digits

MySQL version 5.6.4 saw the implementation of WL#946 TIME/TIMESTAMP/DATETIME with fractional seconds. Once again Oracle has hidden the task description but once again it’s visible on wayback (as always, click “High Level Architecture” to see what the real spec is). In this case the implementation does not match the original spec. MySQL only supports 6 post-decimal digits (microseconds), and the same is true for MariaDB.

How bad is that? Let’s compare:

| DBMS | Maximum Fraction |
|---|---|
| DB2 9.7 | .999999999999 |
| Oracle 12c | .999999999 |
| SQL Server 2012 | .99999999 |
| PostgreSQL 9.3 | .999999 |
| MySQL 5.6 | .999999 |
| Firebird | .9999 |

I look at that comparison and exclaim “MySQL is behind the Big Three Closed-Source DBMSs”. But I acknowledge that others could look at the same comparison and shrug “Oh well, it’s as good as the other open-source DBMSs”. Merging the opinions, I’ll say: post-decimal digit support is not good but, since 5.6.4, not bizarre.

Yes, But

Yes, but what about the fact that, in MySQL, the date 0000-00-00 is legal but becomes NULL if you retrieve it via ODBC? Or that if you assign NULL to a TIMESTAMP NOT NULL, the result is the current timestamp? And what about other equally odd-looking rules?

Here I can’t defend MySQL on the basis that some other DBMS does it. These are MySQL innovations. And I have never heard somebody from outside MySQL say that they have been helpful in a serious project. I can only say, well, at least they’re avoidable.

The claim is just that MySQL date support is not bizarre, in relative terms. The other DBMSs have quirks too. It’s perhaps inevitable in this imperfect world which refuses to go around the sun in a regular and divisible-by-10 number of days.

MySQL, GROUP BY, Select lists, and Standard SQL

The MySQL manual says: “In standard SQL, a query that includes a GROUP BY clause cannot refer to nonaggregated columns in the select list that are not named in the GROUP BY clause.”

I got questioned about that last week, and this week I see that there’s a proposed change in behaviour for MySQL 5.7, so I guess it’s a good time to try to figure out what’s going on in this part of the world.

I checked the manuals for most current DBMSs and confirmed that the ONLY_FULL_GROUP_BY kind of behaviour is required by DB2, Derby, Firebird, HSQLDB, Informix, Mimer, Oracle, SQL Server, and Teradata. The only exceptions are Sybase and SQLite, and I’ll mention PostgreSQL later.

But returning an error is not really absolutely always the right thing.

Suppose your table was made this way:

CREATE TABLE t (a INT PRIMARY KEY, b INT);

And suppose you make this query:

SELECT a, b FROM t GROUP BY a;

This causes an error if sql_mode = ONLY_FULL_GROUP_BY.

But is it really an error?

The trick here is: if column a is the PRIMARY KEY (or a UNIQUE NOT NULL key), and you’re grouping by a, then it is logically impossible — impossible — that there could be two different values of column b.
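A small sketch of the situation, assuming the 5.6-era ONLY_FULL_GROUP_BY check discussed here. The table name t_pk and the sample rows are invented for illustration.

```sql
CREATE TABLE t_pk (a INT PRIMARY KEY, b INT);
INSERT INTO t_pk VALUES (1, 10), (2, 20);

SET SESSION sql_mode = 'ONLY_FULL_GROUP_BY';

-- Rejected by a check that only looks at the GROUP BY list,
-- even though a is the primary key and so each group has one row.
SELECT a, b FROM t_pk GROUP BY a;

-- Two rewrites that such a check accepts; with a as the
-- primary key they return the same rows as the query above would.
SELECT a, b FROM t_pk GROUP BY a, b;
SELECT a, MIN(b) AS b FROM t_pk GROUP BY a;
```

Both rewrites lean on the same fact as the rejected query: each value of a appears in exactly one row, so adding b to the GROUP BY list or wrapping it in MIN() changes nothing in the result.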

One could say that “a determines b”, or that there is a “functional dependency” here. Roland Bouman talked about the matter in 2007 in an article “Debunking GROUP BY myths”. He made the point that, in fact, the current SQL standard does not really absolutely always require that there be an error if there’s a functional dependency.

So let’s look a bit more closely at what the standard says now. Sample:

2) Without Feature T301, “Functional dependencies”, in conforming SQL language, if QCR is a group-invariant column reference, then QCR shall be a reference to a grouping column of the qualifying query of QCR.

Arm-waving our way past the terminology, this kind of thing means that ONLY_FULL_GROUP_BY behaviour is a requirement — for any implementation that doesn’t claim to support Feature T301. Feature T301, eh? That’s not a core feature, in other words no SQL DBMS is required to support T301 in order to claim ANSI/ISO conformance. But it is standard. So one could argue that the MySQL manual is slightly wrong, it shouldn’t suggest that “the standard” requires ONLY_FULL_GROUP_BY. On the other hand, MySQL’s behaviour without ONLY_FULL_GROUP_BY is obviously non-standard, because there’s no checking whether something is “functionally dependent” or not.
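To see why the behaviour without ONLY_FULL_GROUP_BY is non-standard, consider a table with no key at all. This is only a sketch with a hypothetical table t_nokey; which value of b comes back is not something to rely on.

```sql
CREATE TABLE t_nokey (a INT, b INT);   -- no PRIMARY KEY, no UNIQUE
INSERT INTO t_nokey VALUES (1, 10), (1, 20);

SET SESSION sql_mode = '';             -- ONLY_FULL_GROUP_BY is off

-- Accepted without any functional-dependency check. Column b is not
-- determined by a, so the b value returned for a = 1 is indeterminate.
SELECT a, b FROM t_nokey GROUP BY a;
```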

What is to be done, then?

Well, it’s rather short notice to say that something will be in 5.7. There were occasional bitter experiences when incompatible behaviour was rushed into MySQL, and I thought the lessons were learned. I was wrong. I should have listened to Mr Kipling:

As it will be in the future, it was at the birth of Man

There are only four things certain since Social Progress began.

That the Dog returns to his Vomit and the Sow returns to her Mire,

And the burnt Fool’s bandaged finger goes wabbling back to the Fire.

Sure, but shouldn’t MySQL do something about that “functional dependency” stuff?

Well, PostgreSQL did. For the sort of item that I used in the example above, which by the way is called a “BPK-dependency” (base table primary key dependency), recent versions of PostgreSQL will say okay. As a result PostgreSQL now claims “partial” compliance with feature T301. But only partial!

The difficulty is that there are so many possibilities, as soon as you start to consider what should happen with views, left or right joins, subsets of multi-column keys, rollup, subqueries, and the many ways that a functional dependency can become known, besides the simple BPK case. Trudy Pelzer and I didn’t really get to grips with these arcana in our book “SQL-99 Complete, Really”, but Jim Melton and Alan Simon did in their book “SQL:1999 Understanding Relational Language Components”, for several dense pages. I’ll just quote the last sentences:

We realize that the material in this section is complex and abstract in the extreme. We have included it in spite of the complexity to serve readers who need to understand SQL:1999’s enhancements in updatability and who are sufficiently involved with data modeling to have or pursue the background in relational theory. However, in practical terms, we have rarely found ourselves using knowledge of all of these functional dependencies when writing our applications.
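As an illustration of a few of the possibilities listed above (joins, non-primary unique keys, subsets of multi-column keys), here are some sketch queries. All table and column names (fd_parent, fd_child, fd_item, fd_pair) are hypothetical, and I make no claim about which DBMS accepts which query; the comments only say whether a functional dependency logically exists.

```sql
CREATE TABLE fd_parent (id INT PRIMARY KEY, name VARCHAR(20));
CREATE TABLE fd_child (id INT PRIMARY KEY, parent_id INT NOT NULL, qty INT);

-- Join case: p.name is determined by p.id, and the join condition
-- makes p.id equal to the grouping column c.parent_id.
SELECT c.parent_id, p.name, SUM(c.qty)
FROM fd_child c JOIN fd_parent p ON p.id = c.parent_id
GROUP BY c.parent_id;

-- Unique-key case: code is UNIQUE and NOT NULL but is not the primary key,
-- so code determines descr just as a primary key would.
CREATE TABLE fd_item (id INT PRIMARY KEY, code CHAR(3) NOT NULL UNIQUE, descr VARCHAR(20));
SELECT code, descr FROM fd_item GROUP BY code;

-- Subset of a multi-column key: k1 alone does not determine v,
-- so there is no functional dependency to appeal to here.
CREATE TABLE fd_pair (k1 INT, k2 INT, v INT, PRIMARY KEY (k1, k2));
SELECT k1, v FROM fd_pair GROUP BY k1;
```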

I think that it will be a long time before PostgreSQL will have full support, and this will cause confusion — people who understand the rationale will expect queries to work whenever they’re rational according to functional-dependency thoughts, and they won’t work. That doesn’t mean, though, that PostgreSQL is wrong — as I’ve said before, complaints about “half-baked features” are typically wrong. I see that the Derby folks are considering following the PostgreSQL lead. And maybe MySQL someday will too. There’s a worklog task, WL#2489, Fully compliant ONLY_FULL_GROUP_BY mode. Once again Oracle has hidden the task, once again it’s visible on wayback, but WL#2489 had no high-level architecture anyway. Well, that means the plan can be flexible.

And the bottom line is: MySQL should change, but no rush. Oracle should recall Saint Augustine’s prayer: “Lord, grant me chastity and continence, but not yet.” A variation could be: “Lord, let me support standard SQL by default with Feature T301 … but not in 5.7.”