Category: MySQL / MariaDB

MySQL and MariaDB client .so libraries on Linux

The MySQL library is libmysqlclient.so, the MariaDB library is libmariadbclient.so or libmariadb.so. I’ll list some quirks that I think are relatively unknown but good to know. I’ll end with a pointer to a function that’s good to have.

mysql and mariadb clients don’t themselves use .so libraries

To illustrate, here’s the file mysql.dir/link.txt that I got when building MySQL’s source with -DWITHOUT_SERVER=ON.

/usr/bin/c++ -std=c++20 -fno-omit-frame-pointer -ftls-model=initial-exec -g -O2 -ffile-prefix-map=/home/pgulutzan/Downloads/mysql-9.2.0=. -flto=auto -ffat-lto-objects -flto=auto -ffat-lto-objects -fstack-protector-strong -Wformat -Werror=format-security -Wdate-time -D_FORTIFY_SOURCE=2 -Wall -Wextra -Wformat-security -Wvla -Wundef -Wmissing-format-attribute -Woverloaded-virtual -Wcast-qual -Wimplicit-fallthrough=5 -Wstringop-truncation -Wsuggest-override -Wmissing-include-dirs -Wextra-semi -Wlogical-op -ffunction-sections -fdata-sections -O2 -g -DNDEBUG -g1 -Wl,-Bsymbolic-functions -flto=auto -ffat-lto-objects -flto=auto -Wl,-z,relro -Wl,--build-id=sha1 CMakeFiles/mysql.dir/__/sql-common/net_ns.cc.o CMakeFiles/mysql.dir/completion_hash.cc.o CMakeFiles/mysql.dir/mysql.cc.o CMakeFiles/mysql.dir/pattern_matcher.cc.o CMakeFiles/mysql.dir/readline.cc.o CMakeFiles/mysql.dir/client_query_attributes.cc.o CMakeFiles/mysql.dir/multi_factor_passwordopt-vars.cc.o CMakeFiles/mysql.dir/multi_option.cc.o CMakeFiles/mysql.dir/common/user_registration.cc.o CMakeFiles/mysql.dir/__/sql-common/sql_string.cc.o -o ../runtime_output_directory/mysql ../archive_output_directory/libmysqlclient.a ../extra/libedit/libedit-20240808-3.1/src/libedit.a /usr/lib/x86_64-linux-gnu/libssl.so /usr/lib/x86_64-linux-gnu/libcrypto.so /usr/lib/x86_64-linux-gnu/libresolv.so -lm -lcurses

As you can see if you squint, it’s bringing in libmysqlclient.a, the static library.

This affects not only mysql but other executables that MySQL provides. And effects are similar with MariaDB’s source.

MySQL manual says LD_RUN_PATH decides the client .so library

Specifically the Environment Variables” section says in a chart beside LD_RUN_PATH: “Used to specify the location of libmysqlclient.so.” and the “Problems Using the Perl DBI/DBD Interface” section says “Add the path name of the directory where libmysqlclient.so is located to the LD_RUN_PATH environment variable. Some systems use LD_LIBRARY_PATH instead.”

These are not falsehoods but I think they could mislead Linux users.

First, as I mentioned earlier, it doesn’t necessarily apply for what they supply.

Second, as I’ll mention later, on “some systems” — e.g. Linux, eh? — LD_RUN_PATH only has an effect at build time (as when you run gcc) and Linux package developers deprecate it.

Third, as I’ll also mention later, there are several other factors that the dynamic loader will examine though the MySQL manual doesn’t mention them all.

As a client developer I have to pay attention to this advice and look at LD_RUN_PATH when calling dlopen, but it’s not my favourite advice.

MariaDB library isn’t always the same

I said at the start that “the MariaDB library is libmariadbclient.so or libmariadb.so” but it’s not so simple.

There’s a chance that symlinks will exist between libmysqlclient.so and a MariaDB .so.

There’s also a chance that both libmariadbclient.so and libmariadb.so will be available, and libmariadbclient.so will have a symlink to libmariadb.so which is the real target. However, this is not always the case.

Illustration #1: This is what the latest Debian distro says in the sid libmaria-dev list of x32 files:

/usr/lib/x86_64-linux-gnux32/libmariadb.so /usr/lib/x86_64-linux-gnux32/libmariadbclient.so

I’ve left out the irrelevant stuff. The point is, both .so names are there.

Illustration #2: This is what the MariaDB Connector/C contains after a recent download:

~/connector-c/usr/local/lib/mariadb$ ls libmariadb.a libmariadbclient.a libmariadb.so libmariadb.so.3 plugin

I’ve left out the irrelevant stuff. The point is, only one .so name is there. So usually you’ll have no trouble linking to libmariadbclient.so but it’s not guaranteed any more.

The server can display the .so

To see this you’ll have to run the server with performance_schema on, which is the default for MySQL but not for MariaDB, you’ll have to ask for it.

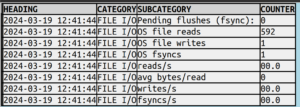

Example pasted from an ocelotgui session:

Notice the name and the version number. But if the library was statically linked, and not an .so, this won’t tell you.

mysql_config can display the .so path

For example,

$ bin/mysql_config --libs -L/home/pgulutzan/mysql-8.3.0-linux-glibc2.28-x86_64/lib -Wl,-R,/home/pgulutzan/mysql-8.3.0-linux-glibc2.28-x86_64/lib -L/home/pgulutzan/mysql-8.3.0-linux-glibc2.28-x86_64/lib/private -Wl,-R,/home/pgulutzan/mysql-8.3.0-linux-glibc2.28-x86_64/lib/private -lmysqlclient -lpthread -ldl -lssl -lcrypto -lresolv -lm -lrt

And mariadb_config would do something similar. It is correct on my machine that the first specified directory indeed contains libmysqlclient.so.

If you follow MySQL’s instructions exactly in section “Building C API Client Programs” this is what you can expect.

But if the client program chooses a different path, this won’t tell you.

Linux utilities can display the .so and the .so path

As well as the programs that come with MySQL or MariaDB that show .so files, there are programs that come with Linux that show .so files. They work with any MySQL or MariaDB executable, but in this section I’ll show examples with common utilities instead because they’re shorter.

I admit that I’m depending on common Linux and ELF format for the examples, if it had to be general I’d perhaps think that libbfd would help me, but I’ve never seen the need.

FIND!

Of course one of the programs is the simple

sudo find / -name “libmy*.so*”

or

sudo find / -name “libmaria*.so*”

but it takes too long and it doesn’t show the files in the context of the caller. So the value is small.

LD!

For example

ld –verbose | grep SEARCH_DIR

SEARCH_DIR("=/usr/local/lib/x86_64-linux-gnu"); SEARCH_DIR("=/lib/x86_64-linux-gnu");

SEARCH_DIR("=/usr/lib/x86_64-linux-gnu"); SEARCH_DIR("=/usr/lib/x86_64-linux-gnu64");

SEARCH_DIR("=/usr/local/lib64"); SEARCH_DIR("=/lib64"); SEARCH_DIR("=/usr/lib64");

SEARCH_DIR("=/usr/local/lib"); SEARCH_DIR("=/lib"); SEARCH_DIR("=/usr/lib");

SEARCH_DIR("=/usr/x86_64-linux-gnu/lib64"); SEARCH_DIR("=/usr/x86_64-linux-gnu/lib");

… These are some standard system paths that ld the GNU linker will look at on a multiarch machine. But they are not necessarily what the dynamic loader will look at so the value is small.

LDD!

For example

$ ldd ./mysql

linux-vdso.so.1 (0x00007fffd2772000)

libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007f23b3b8b000)

libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f23b3b85000)

libssl.so.3 => /home/pgulutzan/mysql-8.3.0-linux-glibc2.28-x86_64/bin/./../lib/private/libssl.so.3 (0x00007f23b38dd000)

libcrypto.so.3 => /home/pgulutzan/mysql-8.3.0-linux-glibc2.28-x86_64/bin/./../lib/private/libcrypto.so.3 (0x00007f23b32a5000)

libresolv.so.2 => /lib/x86_64-linux-gnu/libresolv.so.2 (0x00007f23b3289000)

librt.so.1 => /lib/x86_64-linux-gnu/librt.so.1 (0x00007f23b327f000)

libncurses.so.6 => /lib/x86_64-linux-gnu/libncurses.so.6 (0x00007f23b3254000)

libtinfo.so.6 => /lib/x86_64-linux-gnu/libtinfo.so.6 (0x00007f23b3224000)

libstdc++.so.6 => /lib/x86_64-linux-gnu/libstdc++.so.6 (0x00007f23b3042000)

libm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007f23b2ef3000)

libgcc_s.so.1 => /lib/x86_64-linux-gnu/libgcc_s.so.1 (0x00007f23b2ed8000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f23b2ce6000)

/lib64/ld-linux-x86-64.so.2 (0x00007f23b3bcc000)

Most utilities have a man page like this one.

STRACE!

For example, if you have ocelotgui,

strace ocelotgui 2>/dev/stdout| grep openat

will display

openat(AT_FDCWD, "/usr/mysql/lib/tls/x86_64/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/mysql/lib/tls/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/mysql/lib/tls/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/mysql/lib/tls/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/mysql/lib/x86_64/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/mysql/lib/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/mysql/lib/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/mysql/lib/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/lib/tls/x86_64/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory) openat(AT_FDCWD, "/usr/lib/tls/x86_64/libdl.so.2", O_RDONLY|O_CLOEXEC) = -1 ENOENT (No such file or directory)

… And so on, for failed attempts to open .so files. The failures are expected, by the way. Subdirectory tls (thread local storage) tends to be absent.

LTRACE!

Example:

$ ltrace cp

strrchr("cp", '/') = nil

setlocale(LC_ALL, "") = "/usr/share/locale"

textdomain("coreutils") = "coreutils"

__cxa_atexit(0x560a7dde6ce0, 0, 0x560a7ddfe008, 0x736c6974756572) = 0

is_selinux_enabled(0x478e4b21b3007f54, 0x7fd612321ca0, 32, 0) 0

geteuid() = 1000

getenv("POSIXLY_CORRECT") = nil

… and so on for all the system calls that cp makes till it’s done. This could find calls that open libraries but the value is small.

READELF!

For example:

$ readelf -a /usr/bin/mariadb | grep NEEDED 0x0000000000000001 (NEEDED) Shared library: [libreadline.so.5] 0x0000000000000001 (NEEDED) Shared library: [libncurses.so.6] 0x0000000000000001 (NEEDED) Shared library: [libtinfo.so.6] 0x0000000000000001 (NEEDED) Shared library: [libpthread.so.0] 0x0000000000000001 (NEEDED) Shared library: [libssl.so.1.1] 0x0000000000000001 (NEEDED) Shared library: [libcrypto.so.1.1] 0x0000000000000001 (NEEDED) Shared library: [libz.so.1] 0x0000000000000001 (NEEDED) Shared library: [libdl.so.2] 0x0000000000000001 (NEEDED) Shared library: [libstdc++.so.6] 0x0000000000000001 (NEEDED) Shared library: [libc.so.6]

(Remember the MariaDB library is statically linked so it doesn’t appear here.)

OBJDUMP!

For example:

$ objdump -p /usr/bin/mariadb | grep NEEDED NEEDED libreadline.so.5 NEEDED libncurses.so.6 NEEDED libtinfo.so.6 NEEDED libpthread.so.0 NEEDED libssl.so.1.1 NEEDED libcrypto.so.1.1 NEEDED libz.so.1 NEEDED libdl.so.2 NEEDED libstdc++.so.6 NEEDED libc.so.6

This objdump list is the same as the readelf list. You can read stories like readelf vs. objdump: why are both needed to know you don’t need both.

LD_DEBUG!

Now for examples I’ll need any program that happens to use the dynamic loader, /bin/cp will do.

Start with the readelf utility to see what a program’s dynamic loader is.

Example:

$readelf -l /bin/cp | grep interpreter

[Requesting program interpreter: /lib64/ld-linux-x86-64.so.2]

Okay, in this case the dynamic loader is /lib64/ld-linux-x86-64.so.2. (The same name appears in the earlier ldd example above but I think that’s undependable.) It differs depending on the platform and the program, so always check.

First make sure that all LD_ environment variables are unset. Then:

LD_DEBUG=libs /lib64/ld-linux-x86-64.so.2 –inhibit-cache /bin/cp 2>/dev/stdout | grep search

You’ll see something like

28805: find library=libselinux.so.1 [0]; searching

28805: search path=/lib/x86_64-linux-gnu/tls/x86_64/x86_64:

/lib/x86_64-linux-gnu/tls/x86_64:/lib/x86_64-linux-gnu/tls/x86_64:

/lib/x86_64-linux-gnu/tls:/lib/x86_64-linux-gnu/x86_64/x86_64:/lib/x86_64-linux-gnu/x86_64:

/lib/x86_64-linux-gnu/x86_64:/lib/x86_64-linux-gnu:

/usr/lib/x86_64-linux-gnu/tls/x86_64/x86_64:/usr/lib/x86_64-linux-gnu/tls/x86_64:

/usr/lib/x86_64-linux-gnu/tls/x86_64:/usr/lib/x86_64-linux-gnu/tls:

/usr/lib/x86_64-linux-gnu/x86_64/x86_64:/usr/lib/x86_64-linux-gnu/x86_64:

/usr/lib/x86_64-linux-gnu/x86_64:/usr/lib/x86_64-linux-gnu:/lib/tls/x86_64/x86_64:

/lib/tls/x86_64:/lib/tls/x86_64:/lib/tls:/lib/x86_64/x86_64:/lib/x86_64:/lib/x86_64:

/lib:/usr/lib/tls/x86_64/x86_64:/usr/lib/tls/x86_64:/usr/lib/tls/x86_64:

/usr/lib/tls:/usr/lib/x86_64/x86_64:/usr/lib/x86_64:/usr/lib/x86_64:/usr/lib

(system search path)

… and so on for all the .so files of cp.

LDCONFIG!

For example,

$ /sbin/ldconfig -p | grep -e 'libmysql' -e 'libmaria'

libmysqlclient.so.21 (libc6,x86-64) => /lib/x86_64-linux-gnu/libmysqlclient.so.21

libmarias3.so (libc6,x86-64) => /lib/x86_64-linux-gnu/libmarias3.so

libmariadbd.so.19 (libc6,x86-64) => /lib/x86_64-linux-gnu/libmariadbd.so.19

libmariadbd.so (libc6,x86-64) => /lib/x86_64-linux-gnu/libmariadbd.so

libmariadb.so.3 (libc6,x86-64) => /lib/x86_64-linux-gnu/libmariadb.so.3

libmariadb.so (libc6,x86-64) => /lib/x86_64-linux-gnu/libmariadb.so

liblibmysql_client.so (libc6,x86-64) => /lib/x86_64-linux-gnu/liblibmysql_client.so

Vaguely speaking, sudo make install can change /etc/ld.so.conf and ldconfig can take from /etc/ld.so.conf to the cache and ldconfig -p can show what’s in the cache. This is good for administrator-approved libraries, as opposed to ones that have been installed in a non-system local directory.

DPKG OR WHATPROVIDES!

For example,

$ dpkg -S libmysqlclient.so libmysqlclient21:amd64: /usr/lib/x86_64-linux-gnu/libmysqlclient.so.21 libmysqlclient18: /usr/lib/x86_64-linux-gnu/libmysqlclient.so.18 libmysqlclient21:amd64: /usr/lib/x86_64-linux-gnu/libmysqlclient.so.21.2.41 libmariadb-dev-compat:amd64: /usr/lib/x86_64-linux-gnu/libmysqlclient.so

The dpkg package manager can be on Debian-based distros. On rpm-based distros the equivalent is whatprovides. This is telling you what is supposed to be there, as opposed to what is actually there.

All of the above utilities are fine for their purposes but I’m after something different — a function I can call to find libraries at runtime, loaded or unloaded. I’ll describe the solution at the end of this blog post.

There are ways to force the path

The usual dynamic loader (often on /lib64/ld-linux-x86-64.so.2 or /lib/ld-linux.so.2 but read the previous section to check) is going to search in this order:

LD_AUDIT — environment variable

LD_PRELOAD — environment variable

DT_RPATH — set by -Wl non-default option

LD_LIBRARY_PATH — environment variable

DT_RUNPATH — set by -Wl default option or LD_RUN_PATH environment variable during build

ld.so.cache and defaults.

LD_AUDIT: specialized and difficult. I mention it but don’t expect it.

LD_PRELOAD: I’ve seen this recommended for the MySQL or MariaDB server but for clients it’s probably only a good choice if you need to force a specific .so file, as opposed to a specific path containing .so files. Oracle used to suggest this for their drop-in replacement.

DT_RPATH: set before runtime. With gcc the way to force DT_RPATH is

gcc … -Wl,-rpath,,-rpath,<library_path>,-disable-new-dtag

The -disable-new-dtag is necessary nowadays because DT_RPATH is set with the old tag. With the default new tag, -Wl,-rpath sets DT_RUNPATH instead. In other words, you’ll probably never see this.

LD_LIBRARY_PATH: or actually “ld_library_paths” plural, because you can put multiple paths delimited by colons or semicolons.

DT_RUNPATH: set before runtime. With gcc the way to force DT_RUNPATH is

gcc … -Wl,-rpath,<library_path>,-rpath,<library_path>

or

LD_RUN_PATH=<library_path> gcc … but setting the LD_RUN_PATH environment variable has no effect at runtime.

ld.so.cache: This is a list of the .so files that the dynamic loader will look for by default. It’s visible with

ldconfig -r

Typically the list is changed when you sudo make install something, so although ldconfig’s not the top choice it’s the most reliable one. You know that some package installer thought that the .so in this list is an appropriate one.

You’ll notice that DT_RPATH and DT_RUNPATH don’t get in unless they’re specified before runtime. And if you specify them yourself and then try to make a .deb package, lintian will tell you: they’re deprecated. For another package type, SUSE will tell you they’re forbidden. So they don’t matter so much.

Since environment variables do matter so much, it can be a good idea to check whether they’re already set, for example you put something in .bashrc and forgot. The best way to check environment variables is printenv (not echo). To check all the environment variables that start with LD_, say

printenv | grep LD_

Since environment variables matter so much, it can be a good idea to set them in a canonical way. For example, to say you want the dynamic loader to look in /tmp/A and /tmp/B, say

LD_LIBRARY_PATH=/tmp/A:/tmp/B program-name

and to temporarily turn it off, say

env -u LD_LIBRARY_PATH program-name

This is slightly better than

export LD_LIBRARY_PATH=program-name

because export has a permanent effect rather than an effect specific for program-name, and because export can only set the environment variable to blank rather than getting rid of it. Another thing is that the delimiter between path names is a colon — yes a semicolon would work here but it wouldn’t work with -Wl,-rpath and you might as well have the same delimiter in all possible lists. I didn’t surround the path names with ”s or “”s in the example, but if there’s a $ then their absence could cause confusion. For example:

export LD_LIBRARY_PATH=/$LIB

printenv LD_LIBRARY_PATH

… You’ll see only the / character, because $ is a signal that an environment variable name follows, unless it’s quoted or escaped.

$LIB and $PLATFORM and $ORIGIN

The dynamic loader will replace occurrences of $LIB or $PLATFORM or $ORIGIN within path names.

$LIB: According to a “Linux manual page” and according to

man ld.so

on my computer, “$LIB (or equivalently ${LIB}) This expands to lib or lib64 depending on the architecture (e.g., on x86-64, it expands to lib64 and on x86-32, it expands to lib).”

Hah! Just kidding. Pilot Logic has a page indicating that’s true for what they call “Red Hat Base” and “Mandriva Base” but not for “Debian Base” or “Arch Base” or “Gentoo Base”. (It’s actually the distro’s glibc that’s responsible.) And there might be more differences in the future.

On top of that, if you go back and look at the example of LD_DEBUG use, you’ll see a bunch of /tls (thread local storage) subdirectories. Luckily, although $LIB can expand to include them, they won’t exist.

$PLATFORM: expect something like “x86_64” on Debian-like 64-bit platforms. Hah! Just kidding again. Debian’s “ArchitectureSpecificsMemo” indicates other possibilities.

$ORIGIN: This is supposed to be the path of the program that’s running. I’ve seen complaints that it’s actually the path of the library in the program that’s running, but I haven’t understood the difference. So if you say

/usr/bin/wombat

then the $ORIGIN is /usr/bin. And if you build with

gcc … -Wl,-z,origin,-rpath,./lib,-rpath,\$ORIGIN

that’s what you’ll see — though notice the escaping of the $ here.

How ocelotgui handles it

There’s no reliable way to decide at install time what the client library will be, because the name varies, the location varies, and the appropriate library might depend on the server that ocelotgui is connecting to.

The

CMakeLists.txt for ocelotgui

has, for 64-bit builds,

set(TMP_INSTALL_RPATH "${TMP_INSTALL_RPATH}/usr/mysql/lib:

/usr/lib:/usr/lib/mysql:/usr/local:/usr/local/lib:

/usr/local/lib/mysql:/usr/local/mysql:/usr/local/mysql/lib:

/usr:/usr/lib/x86_64-linux-gnu:/usr/lib64:/usr/lib64/mysql:

/usr/mariadb/lib:/usr/lib/mariadb:/usr/local/lib/mariadb:

/usr/local/mariadb:/usr/local/mariadb/lib:/usr/lib64/mariadb")

which is reasonable.

However, it’s disabled for the .deb or .rpm packages.

By default it looks first for libmysqlclient then libmariadbclient then libmariadb,

but that’s easy to change with command-line options or in the .cfg file.

And if there’s no non-default specification then the standard-loader paths matter.

This means runtime flexibility is required, and that means we have to use dlopen(). Therefore ldd ocelotgui will tell you nothing, but in this case it’s because we’re looking everwhere, which is quite different from the mysql/mariadb client case because they’re bringing in a .a library.

Finally, Help|About and Help|libmysqlclient will say more about what’s actually connected or could be.

The ocelotgui GUI for MySQL and MariaDB is still version 2.5, which means it hasn’t kept up with recent changes of the servers.

There has to be a new version that takes into account the points that I’ve described here. It will incorporate the code of a new github project: pgfindlib.

pgfindlib

I’ve created a small new GitHub project, pgfindlib. It has major advantages over all the things I’ve described in this post, if the objective is to find relevant .so files at runtime, without cruft, from within a program.

I’ll just quote the first bit of its README here:

…

Version 0.9.7

The pgfindlib function finds dynamic libraries (.so files) in the order the loader would find them.

Copyright (c) 2025 by Peter Gulutzan. All rights reserved.

What pgfindlib is good for

Knowing what .so files the loader would load, but loading them yourself with dlopen(), means you can customize at runtime.

Or, as part of –help you can tell users what the loader picked up, and from where, and what choices it ignored.

An example: Using files supplied with the package:

mkdir /tmp/pgfindlib_example echo "Dummy .so" >> /tmp/pgfindlib_example/libutil.so gcc -o main main.c pgfindlib.c -Wl,-rpath,/tmp/pgfindlib_example LD_LIBRARY_PATH='/$LIB' ./main 'where libutil.so, libcurl.so, libgcc_s.so'

The result might look like this:

1,,,002 pgfindlib,001 version 0.9.7,003 https://github.com/pgulutzan/pgfindlib,, 2,,,005 $LIB=lib/x86_64-linux-gnu,006 $PLATFORM=x86_64,007 $ORIGIN=/home/pgulutzan/pgfindlib,, 3,,,012 in source LD_LIBRARY_PATH replaced /$LIB with /lib/x86_64-linux-gnu,,,, 4,/lib/x86_64-linux-gnu/libcurl.so,LD_LIBRARY_PATH,013 symlink,,,, 5,/lib/x86_64-linux-gnu/libcurl.so.4,LD_LIBRARY_PATH,013 symlink,,,, 6,/lib/x86_64-linux-gnu/libcurl.so.4.6.0,LD_LIBRARY_PATH,,,,, 7,/lib/x86_64-linux-gnu/libgcc_s.so.1,LD_LIBRARY_PATH,,,,, 8,/lib/x86_64-linux-gnu/libutil.so,LD_LIBRARY_PATH,013 symlink,,,, 9,/lib/x86_64-linux-gnu/libutil.so.1,LD_LIBRARY_PATH,013 symlink,,,, 10,/tmp/pgfindlib_example/libutil.so,DT_RUNPATH,071 elf read failed,,,, 11,/lib/libgcc_s.so.1,ld.so.cache,,,,, 12,/lib/x86_64-linux-gnu/libcurl.so,ld.so.cache,013 symlink,014 duplicate of 4,,, 13,/lib/x86_64-linux-gnu/libcurl.so.4,ld.so.cache,013 symlink,014 duplicate of 5,,, 14,/lib/x86_64-linux-gnu/libgcc_s.so.1,ld.so.cache,014 duplicate of 7,,,, 15,/lib/x86_64-linux-gnu/libutil.so,ld.so.cache,013 symlink,014 duplicate of 8,,, 16,/lib/x86_64-linux-gnu/libutil.so.1,ld.so.cache,013 symlink,014 duplicate of 9,,, 17,/lib32/libgcc_s.so.1,ld.so.cache,075 elf machine does not match,,,, 18,/lib32/libutil.so.1,ld.so.cache,013 symlink,075 elf machine does not match,,, 19,/lib/libgcc_s.so.1,default_paths,014 duplicate of 11,,,, 20,/usr/lib/libgcc_s.so.1,default_paths,014 duplicate of 11,,,, rval=0

This means: the loader would look first in /lib/x86_64-linux-gnu because of LD_LIBRARY_PATH. This takes precedence over DT_RUNPATH, which is where the first occurrence of libutil.so appears (this appears because of the -rpath option in the gcc command). Finally there are some .so libraries in ld.so.cache and the system libraries, which is where the loader would go if there was no prior. But some of the lines contain warnings, for example “071 elf read failed” because /tmp/pgfindlib_example/libutil.so is not a loadable file, or for example “075 elf machine does not match” because main is 64-bit and /lib32/libgcc_s.so.1 is 32-bit.

That’s all you need to know in order to decide if you’re interested. If you are, read on, there are many options and a few warnings.

…

Yes, the input is [FROM clause] WHERE clause, the output is table.

Now go over to the pgfindlib repository https://github.com/pgulutzan/pgfindlib and clone it. Since you’ve read all this, you’ll want that.

Column Order in SQL Tables

Read The language rules we know – but don’t know we know which says “Adjectives in English absolutely have to be in this order: opinion-size-age-shape-colour-origin-material-purpose Noun.” Then you’ll know this is correct:

CREATE TABLE little_girls (

grade FLOAT, height SMALLINT, birth DATE, fatness BLOB,

hair SET('blond','black'), previous_school VARCHAR(64),

is_made_of_sugar_and_spice_and_everything_nice BOOL,

reason_for_existence TEXT DEFAULT 'thank heaven');

because columns are attributes and so are adjectives.

Now I’ll tell you the other factors that affect whether chicken columns precede egg columns.

Primary key columns first

As the Oracle “Ask Tom” guy said unenthusiastically in 2012: “For example – most people – pretty much all people – would put the primary key FIRST in the table. It is a universally accepted approach – convention.” Sometimes this is part of a “rule of thumb”. Sometimes it’s part of “logical data modeling standards”. Sometimes it’s part of “business rules”.

I’ve even seen an “automated analysis of database design” tool that marks you down if you put the primary key somewhere else.

General before specific

The United States Postal Service says “Automated mail processing machines read addresses on mailpieces from the bottom up and will first look for a city, state, and ZIP Code.” because those folks know that what matters first is the larger area and what matters last is the street spot.

That’s also what people care about so often they’ll say

genus before species,

or schema name before object name,

or test type before test result.

Specificity is not the same as uniqueness — there might be more addresses “100 Main Street” then there are states in the USA. But it is about moving from the target to the bullseye. Unique-first might be an advantage for CREATE INDEX, but not for CREATE TABLE. And that might mean you might want to say CREATE TABLE t (column1, column2 PRIMARY KEY (column2, column1)) but it does not mean you want to reverse the order of display.

Surname first

Looking at the States stats again, there are about 5 million occurrences of the most common given name (James) and about 2.5 million occurrences of the most common last name (Smith). That’s anecdotal but corresponds to the general belief that last names are uniquer than first names. What matters more is that the name James is less useful for identification, and therefore less important.

Alphabetical

If you’ve ever heard “Choose one from column A and one from Column B” you’ll feel this isn’t quite absurd, and if you’ve got 26 columns named column_a through column_z then you’ll appreciate it if there’s an arbitrary-but-familiar way to glance through them.

And in fact it’s not arbitrary if your column names have prefixes that indicate what group they belong to. Surely user_name and user_address belong together, or if it’s the address suffix that matters more then alphabetical DESC would work though I’ve never seen it proposed.

Important before trivial

There’s advice that columns should appear in order of importance, and if users don’t know what’s important then there are clues.

Is the value usually the same or usually NULL? Then it carries little information.

Is the column name absent from most SELECT statements? Then most people don’t care.

Is it indexed, or unique, or in a foreign key? Then somebody has already decided it matters. Accept that and bump them closer to the start.

What matters for SELECT * is:

the columns that you like most should be on the left of the screen (even if you’re Arab) so you don’t need to use horizontal scrolling.

What matters for SELECT column_x is:

if the row is so big that it might stretch over two blocks, the DBMS might be happier if the column is in the first block.

The ancient wisdom

There’s a rule that I’ve seen often, with occasional variations:

“Primary key columns first.

Foreign key columns next.

Frequently searched columns next.

Frequently updated columns later.

Nullable columns last.

Least used nullable columns after more frequently used nullable columns.

Blobs in own table with few other columns.”

What I’ve seen much less often is a link to what appears to be the first occurrence: https://community.hpe.com/t5/operating-system-hp-ux/oracle-columns/td-p/2840825. It’s from 2002 and it’s in response to a question about Oracle.

So, for example, if your DBMS happens to be one that doesn’t store NULLs if they’re the last things in the row, then that’s good advice. If on the other hand your DBMS happens to be one that has a preface of bits indicating whether the column is NULL, then it’s pointless. Documentation about this might for one InnoDB case be slightly misleading; however, my point is that most of those items will depend on your DBMS and the clauses that you use for a storage engine.

Nevertheless I’ll endorse the “NULLs last” tip because I’ve seen an old claim that it affects SQL Server too. That is, if it’s been a rule for more than one DBMS, even if it’s pointless, maybe it affects compatibility because others still think it’s real.

Old SQL Server rules, for what they’re worth, also include “fixed fields first” and “short before long” but they’re obsolete. Also if you’ve got InnoDB’s compact format then lengths of variable columns are in the header. So when you see claims that you have to journey through the varchar columns till you reach the char columns, be skeptical.

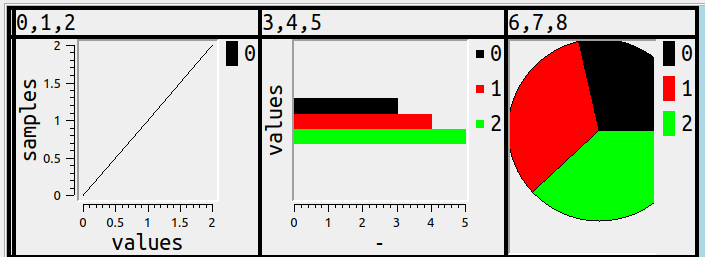

Autocompletion

Suppose in the past you said

CREATE TABLE n (s1 INT, s2 INT);

and now (after rehash) you start an INSERT statement:

INSERT INTO n (

at this point the ocelotgui autocompleter will present you with a hint and you can save a fraction of a second by selecting the first item so you don’t have to navigate.

Unfortunately you won’t save anything when the statement is SELECT, though, because the SELECT list comes before the FROM clause. That is, the GUI won’t know what table you’re using until you’ve already specified the columns.

Speaking of irritating design, I take this opportunity to mention the syntax:

INSTALL PLUGIN plugin_name SONAME 'plugin_library';

Notice again how the GUI can’t hint about a name at the appropriate point, because it won’t know the library yet. I class this as a violation by the vendor of the advice “general before specific”.

Compression

If there is a “run” of bytes with the same value, then a “run-length encoding” (RLE) algorithm might save space. Thus if if it is likely that 20 columns will all contain 0, then keeping them together is a good idea.

But it’s only an idea. The practical considerations are that (a) there’s no compression (b) the typical compression is some variant of Lempel-Ziv which wouldn’t require the columns to be exactly contiguous, (c) it’s hard to predict what algorithms and byte placements will actually save the most space without trying them all (d) they’re not all available unless you build from source.

Consistency

Consistency between tables: if table #1 has columns in a particular order, then table #2 should too. Imitate the INFORMATION_SCHEMA tables because they are likely to be static, although the collations of string columns are likely to change. I’d add “imitate examples in the manual”, but only if the examples in the manual are themselves consistent. For example the MySQL manual example

CREATE TABLE pet (name VARCHAR(20), owner VARCHAR(20),

species VARCHAR(20), sex CHAR(1), birth DATE, death DATE);

has no explicit primary key, but the columns might be in order of importance and the final column is the one most likely to contain NULL. For example the MariaDB manual example

CREATE TABLE test.accounts ( id INT PRIMARY KEY AUTO_INCREMENT, first_name VARCHAR(25), last_name VARCHAR(25), email VARCHAR(100), amount DECIMAL(15,2) CHECK (amount >= 0.0), UNIQUE (email) ) ENGINE=InnoDB;

has the PRIMARY KEY clause mixed in the column definition but the UNIQUE clause outside the column definition, and has first name before surname.

Consistency between statements: if WHERE clauses often have WHERE a = 5 AND b = 6, or if ORDER BY clauses often have A, B ASC, or if INSERT statements often have (a, b), then the CREATE TABLE definition can serve as a guide by letting users know that a comes before b by design.

Consistency between definitions: if table #1 has one foreign key referencing table #X and then another foreign key referencing table #Y, and table #2 has one foreign key referencing table #y and then another foreign key referencing table #X, then ask yourself why the order of foreign keys in table #2 is not the same as the order of foreign keys in table #1. Ideally the order will be reflected in entity-relationship diagrams.

ocelotgui changes

The ocelotgui 2.4 release is available for download from github.

The debugger feature won’t work with MariaDB 11.5ff for reasons I’ll explain elsewhere. However, if you download the source and build, it will work. Or wait for ocelotgui 2.5 which (I hope) will be released soon.

Make SHOW as good as SELECT even with InnoDB

Here’s a snippet of what I’d like SHOW ENGINE INNODB STATUS to look like:

as opposed to what the server sends:

... -------- FILE I/O -------- Pending flushes (fsync): 0 295 OS file reads, 1 OS file writes, 1 OS fsyncs 0.00 reads/s, 0 avg bytes/read, 0.00 writes/s, 0.00 fsyncs/s ...

In my last post I described a way to

Make SHOW as good as SELECT”

which was possible because most result sets from SHOW etc. are at least table-like, but

STATUS’s wall of text isn’t table-like. So I forced it into a table with these basic rules:

- A line inside ——s, like FILE I/O, is the category of what follows.

- Otherwise a line is a row but if it contains commas it is multiple rows.

- Numbers can usually be extracted from text as different columns..

After that there’s still a bunch of fiddling, I put the details in source-code comments.

Version 2.3

The new features related to SHOW etc. are now in a released version as well as in source code,

downloadable from github.

In the rest of this post I’ll show a complete result from SHOW ENGINE INNODB STATUS; (“before”),

and the same data from SHOW ENGINE INNODB STATUS WHERE 1 > 0; after ocelot_statement_syntax_checker

has been set to ‘7’ (“after”). (Instead of copying the Grid Widget I copied from the History Widget

after setting Max Row Count to 100.)

“before”

| InnoDB | | ===================================== 2024-03-19 12:39:45 0x7f80f01f3700 INNODB MONITOR OUTPUT ===================================== Per second averages calculated from the last 43 seconds ----------------- BACKGROUND THREAD ----------------- srv_master_thread loops: 0 srv_active, 2455 srv_idle srv_master_thread log flush and writes: 2454 ---------- SEMAPHORES ---------- ------------ TRANSACTIONS ------------ Trx id counter 754 Purge done for trx's n:o < 0 undo n:o < 0 state: running but idle History list length 0 LIST OF TRANSACTIONS FOR EACH SESSION: ---TRANSACTION (0x7f8111334680), not started 0 lock struct(s), heap size 1128, 0 row lock(s) ---TRANSACTION (0x7f8111333b80), not started 0 lock struct(s), heap size 1128, 0 row lock(s) -------- FILE I/O -------- Pending flushes (fsync): 0 295 OS file reads, 1 OS file writes, 1 OS fsyncs 0.00 reads/s, 0 avg bytes/read, 0.00 writes/s, 0.00 fsyncs/s --- LOG --- Log sequence number 463193 Log flushed up to 463193 Pages flushed up to 362808 Last checkpoint at 362808 ---------------------- BUFFER POOL AND MEMORY ---------------------- Total large memory allocated 167772160 Dictionary memory allocated 853016 Buffer pool size 8064 Free buffers 7647 Database pages 417 Old database pages 0 Modified db pages 164 Percent of dirty pages(LRU & free pages): 2.033 Max dirty pages percent: 90.000 Pending reads 0 Pending writes: LRU 0, flush list 0 Pages made young 0, not young 0 0.00 youngs/s, 0.00 non-youngs/s Pages read 273, created 144, written 0 0.00 reads/s, 0.00 creates/s, 0.00 writes/s No buffer pool page gets since the last printout Pages read ahead 0.00/s, evicted without access 0.00/s, Random read ahead 0.00/s LRU len: 417, unzip_LRU len: 0 I/O sum[0]:cur[0], unzip sum[0]:cur[0] -------------- ROW OPERATIONS -------------- 0 read views open inside InnoDB state: sleeping ---------------------------- END OF INNODB MONITOR OUTPUT ============================ |

“after”

/

Make SHOW as good as SELECT

For example, this works:

SHOW AUTHORS GROUP BY `Location` INTO OUTFILE 'tmp.txt';

You’re thinking “Hold it, MySQL and MariaDB won’t allow SHOW (and similar statements like ANALYZE or CHECK or CHECKSUM or DESCRIBE or EXPLAIN or HELP) to work with the same clauses as SELECT, or in the same places.” You’re right — but they work anyway. “Eppur si muove”, as Galileo maybe didn’t say.

I’ll explain that the Ocelot GUI client transforms the queries so that this is transparent, that is, the user types such things where SELECTs would work, and gets result sets the same way that SELECT would do them.

Flaws and workarounds

I’ll call these statements “semiselects” because they do what a SELECT does — they produce result sets — but they can’t be used where SELECT can be used — no subqueries, no GROUP BY or ORDER BY or INTO clauses, no way to way to choose particular columns and use them in expressions.

There are three workarounds …

You can select from a system table, such as sys or information_schema or performance_schema if available and if you have the privileges and if their information corresponds to what the semiselect produces.

For the semiselects that allow WHERE clauses, you can use the bizarre “:=” assignment operator, such as

SHOW COLUMNS IN table_name WHERE (@field:=`Field`) > '';

and now @field will have one of the field values.

You can get the result set into a log file or copy-paste it, then write or acquire a program that parses, for example by extracting what’s between |s in a typical ASCII-decorated display.

Those three workarounds can be good solutions, I’m not going to quibble about their merits. I’m just going to present a method that’s not a workaround at all. You just put the semiselect where you’d ordinarily put a SELECT. It involves no extra privileges or globals or file IO.

Example statements

CHECK TABLE c1, m WHERE `Msg_text` <> 'OK'; SELECT * FROM (DESCRIBE information_schema.tables) AS x ORDER BY 1; SHOW COLLATION ORDER BY `Id` INTO OUTFILE 'tmp.txt'; SELECT `Type` FROM (SHOW COLUMNS IN Employees) AS x GROUP BY `Type`; SELECT UPPER(`Name`) from (SHOW Contributors) as x; SHOW ENGINES ORDER BY `Engine`; (SELECT `Name` FROM (SHOW CONTRIBUTORS) AS x UNION ALL SELECT `Name` FROM (SHOW AUTHORS) AS y) ORDER BY 1; CREATE TABLE engines AS SHOW ENGINES;

How does this work?

The client has to see where the semiselects are within the statement. That is easy, any client that can parse SQL can do it.

The client passes each semiselect to the server, and gets back a result, which ordinarily contains field names and values.

The client changes the field names and values to SELECTs, e.g. for SHOW CONTRIBUTORS the first row is

(SELECT 'Alibaba Cloud' AS `Name`, 'https://www.alibabacloud.com' AS `Location`, 'Platinum Sponsor of the MariaDB Foundation' AS `Comment")

and that gets UNION ALLed with the second row, and so on.

The client passes this SELECT to the server, and gets back a result as a select result set.

Or, in summary, what the client must do is: Pass the SHOW to the server, intercept the result, convert to a tabular form, send or SELECT … UNION ALL SELECT …; to the server, display.

However, these steps are all hidden. the user doesn’t have to care how it works.

Limitations

It requires two trips to the server instead of one. The client log will only show the semiselect, but the server sees the SELECT UNION too.

It will not work inside routines. You will have to CREATE TEMPORARY TABLE AS semiselect; before invoking a routine, in order to use the semiselect’s result set inside CREATE FUNCTION | PROCEDURE | TRIGGER.

Speaking of CREATE TEMPORARY TABLE AS semiselect, if there are VARCHAR columns, they will only be as big as the largest item in the result set.

It will not work inside CREATE VIEW.

Sometimes it will not work with nesting, that is semiselects within semiselects might not be allowed.

Some rare situations will expose the SELECT result in very long column names.

Try it today if you can build from source

On Linux this is easy — download libraries that ocelotgui needs, download ocelotgui, cmake, make. (On Windows it’s not as easy, sorry.) The source, and the README instructions for building, are on github.

After you’ve started up ocelotgui and connected to a MySQL or MariaDB server, there is one preparatory step: you have to enable the feature. (It’s not default because these aren’t standard SQL statements.) You can do this by going to the Settings|Statement menu and changing the Syntax Checker value to 7 and clicking OK. Or you can enter the statement

SET OCELOT_STATEMENT_SYNTAX_CHECKER = '7';

Now the feature is enabled and you can try all the examples I’ve given. You’ll see that they all work.

Of course it’s made available this way because the status is beta.

Try it a bit later if you can’t build from source

This will be available in executable form in the next release of ocelotgui, real soon now. If you have a github account, you can go to the github page and click Watch to keep track of updates.

Update: the release happened on 2024-03-18, source and executables are at https://github.com/ocelot-inc/ocelotgui.

Version 2.1

Ocelotgui, the Ocelot Graphical User Interface, version 2.1, is now available for download. The principal new feature is the chart modifications described in the last post, Charts. Packages are for Linux or Windows, compatible DBMSs are MySQL or MariaDB or Tarantool. Get description and instructions and clone source from GitHub Code area, or get the release from GitHub Releases area.

Charts

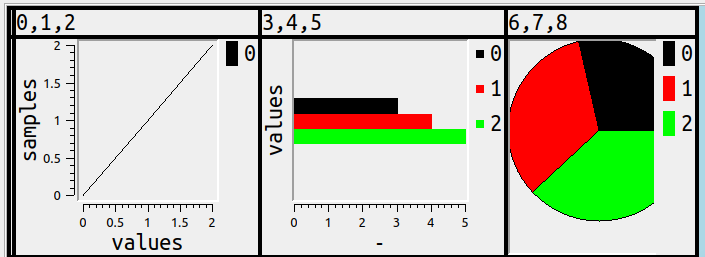

SELECT 0,1,2,3,4,5,6,7,8; result can look like this

but should it?

I’ll try to cover here what would be an obvious way to show number columns in charts, or if not obvious then at least simple, or if not obvious or simple then at least understandable, or if none of the above, well, good looking.

The initial part of this description will look a bit like what you see with DB2 magic commands for bar or line or pie charts, but I like to think the later part of this description shows something more sophisticated.

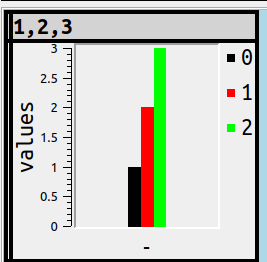

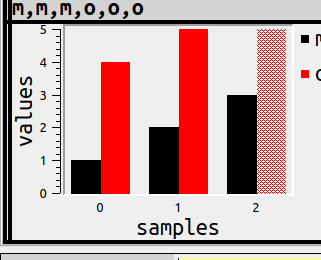

Bars

Start with the most obvious: any group of numbers can be shown as a group of bars.

Should they be horizontal by default? That appears to be the less common choice, A Complete Guide to Bar Charts says “While the vertical bar chart is usually the default …”, ChartJs says “The default for this property is ‘x’ and thus will show vertical bars.”

But a SAS blog 3 reasons to prefer a horizontal bar chart persuaded me because, when we’re a GUI displaying an SQL result set, we usually have more room on the x (width) axis than on the y (height) axis.

Now, as for simpleness: that should mean there’s no extra work or time for exporting, manipulating in a non-SQL environment, learning Python, or even having to think — if the total time including user time is more than a second, that’s a fail. Therefore it has to be possible to get

SELECT 1,2,3;

which ordinarily displays as

to display as

with a shortcut that includes a mnemonic. Therefore hitting Alt+Shift+B should switch immediately to the bar-chart display.

But it can get more complex, and that means users must enter instructions, and the only way to make that simpler is to provide instruction syntax that looks like SQL, thus:

SET ocelot_grid_chart = 'bar vertical';

… since users will know that SET value = literal is the SQL way to assign, and will know that ''s enclose literals, and will know what vertical means. So this is the result and it won’t be surprising:

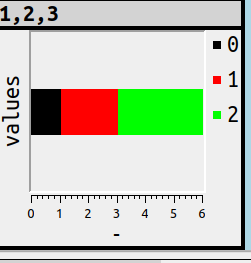

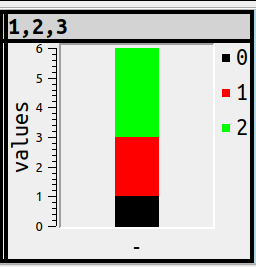

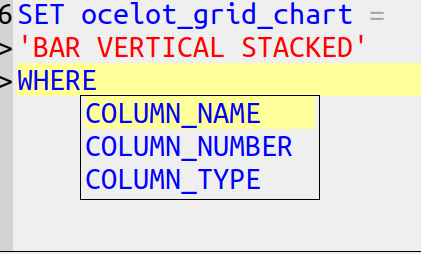

Another bar-chart variant is stacking, that is, putting 3 values in one bar instead of 3 side-by-side bars. For example

SET ocelot_grid_chart = 'bar stacked';

or

SET ocelot_grid_chart = 'bar vertical stacked';

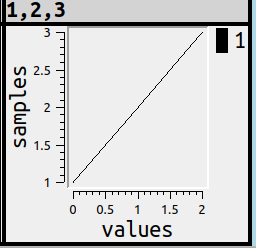

Lines

Lines are a bit less obvious than bars, because if there’s only one number in the series, they’re meaningless. Nevertheless, there should be a simple way to show a chart. Therefore Alt+Shift+L will cause

x

Maybe, although I suppose everyone has seen this sort of thing before, I should make some terminology notes around now …

X-axis: usually this is the horizontal, with the word “samples”

Y-axis: usually this is the vertical, with the word “values”

Legend: in the upper right corner, with a colour icon and a short indicator

Ticks: the tiny marks along the values, so users can see what the scale is.

For the French terms, see Statistique Canada.

But if users don’t know the terms, that’s fine, they understand the picture anyway.

Pies

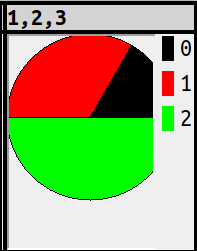

Pies are obvious and simple too, all they require is

Alt+Shift+P or

SET ocelot_grid_chart='pie';

and SELECT 1,2,3; will look like this:

Fine. But putting numbers along the x-axis and y-axis wouldn’t be informative, and nobody has figured out what should happen with negative numbers. I think Microsoft Excel would take the absolute value, I think it makes more sense to ignore, but the point is: no solution is intuitive for all, therefore there’s a gotcha, therefore it’s not obvious and simple after all.

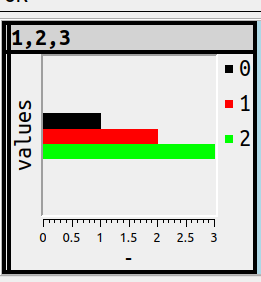

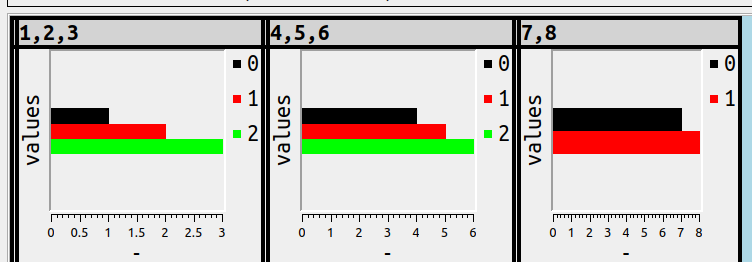

Groups

Every illustration so far has been simple, a 3-number series. There’s no complicated decision-making, the algorithm is just “if it’s a number, i.e. any of the data types that the DBMS fits in a general numeric category, it’s in, otherwise it’s out”.

It would follow, then, that

>SELECT 1,2,3,'',4,5,6,'',7,8,9;

would result in this if the setting was for bar charts:

There are three “groups” here, and “groups” is a better word than “series” because GROUP is an SQL-standard term.

Subgroups

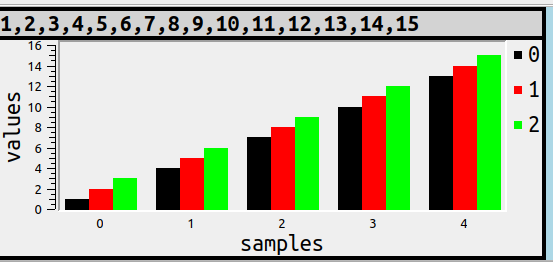

Now things get a bit more complex. What makes us decide that every individual numeric-column occurrence should be part of a separate bar or line or pie segment? Answer: nothing does, that’s simple but not really the best for representing some of the typical chart situations.

Take the possibility that all the columns which have something in common are combined. The commonness could be the value. For example

SET ocelot_grid_chart='bar vertical subgroup by value % 3';

SELECT 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15;

That is, the values which are divisible by 3 are together, but distributed across the landscape. Each of the things that are together are usually called “samples” if one uses chart terminology, and that’s why in this illustration the word “samples” appears at the bottom, and each subgroup has a separate colour, which is echoed by the legend.

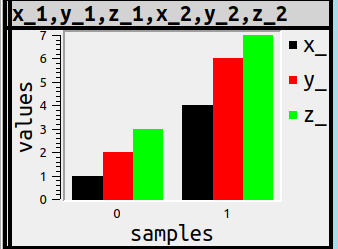

More usefully, I think, the commonness could be the column_name prefix. The usefulness lies in the fact that users know how to put AS clauses after column names in the select list, so they can determine what the subgroups are. For example

SET ocelot_grid_chart='bar vertical subgroup by left(column_name, 1)';

SELECT 1 AS x_1, 2 AS y_1, 3 AS z_1, 4 AS x_2, 6 AS y_2, 7 AS z_2;

There might be other criteria for deciding how to subgroup, but they’re probably less important. What’s important is that once again the syntax is something any SQL user will understand.

Actually the word that chart-lovers prefer here is “categories”, and “subgroups” isn’t really a word you’ll find in typical SQL, but at the moment I’m thinking it’s “SQL-ish”.

Nulls

Since this is SQL, NULLs exist.

There’s no such thing as obvious when NULL might mean unknown or inapplicable, but this isn’t Oracle so it would be too trite to say NULLs are zeros, at least when it’s possible to hint otherwise. Thus

SET ocelot_grid_chart='bar vertical subgroup by left(column_name, 1)';

SELECT 1 AS m, 2 AS m, 3 AS m, 4 AS o, 5 AS o, NULL AS o;

doesn’t use a solid colour, but has the same size as whatever the largest bar is.

I worry that by now we’ve evolved from “obvious” to merely “understandable”, so I’ll explain this example more deeply. In this example there are three samples of ‘m’ and ‘o’. The first sample, label 0, has the first ‘m’ and it has value = 1, the black bar, and it has the first ‘o’ and it has value = 4, the red bar. Sample 1 has the second ‘m’ and the second ‘o’. Sample 2 has the third ‘m’ and ‘o’, and, since the third ‘o’ is NULL, it is not solid. The legend in the right corner is helpful for showing that

black is ‘m’ and red is ‘o’.

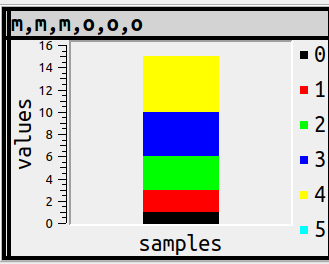

WHERE clauses

But surely not every numeric column should always be in a chart? I think of column names that end with ‘_id’, or the possibility that one will want to display both the chart and the numbers as text.

The SQL syntax for deciding what’s in a group could be CASE … WHEN, or IF … THEN, but what even the novice SQL user has seen is WHERE. The condition could be COLUMN_NAME relative-operator value, COLUMN_NUMBER relative-operator value, COLUMN_TYPE relative-operator value. By the way, since this is a GUI, users will see hints for autocompletion when they type WHERE, for example

and after

SET ocelot_grid_chart='bar vertical stacked' WHERE column_type = 'integer';

SELECT 1,1.1,2,7,-44;

the result will not include 1.1 in a chart because it is not an integer.

And this is where it becomes possible at last to show the statements that caused the example at the start of this post, where some of the columns were in a bar chart, some in a line chart, and some in a pie.

SET ocelot_grid_chart = 'line' WHERE column_number <= 3;

SET ocelot_grid_chart = 'bar' WHERE column_number >= 4 AND column_number <= 6;

SET ocelot_grid_chart = 'pie' WHERE column_number > 6;

SET ocelot_grid_chart = '' WHERE column_number > 100;

SELECT 0,1,2,3,4,5,6,7,8;

and we’ve arrived at the example we started with.

Turning it off

Alt+Shift+N or

SET ocelot_grid_chart='';will erase all earlier chart statements. So there’s a simple way to go back to the regular way to display results.

Source Code

My main purpose describing all this is to show what the design of a GUI should look like, if my observations about goals make sense.

The illustrations show what the implementation looks like as of today, they’re screenshots of ocelotgui, and they work as I’ve described. But currently they’re only distributed in source code. Anyone who wants to reproduce them today can download the source from github, follow the special build instructions in file chart_options.txt, connect to MySQL or MariaDB or Tarantool, and see the same things. You will notice that you need to use the Qwt library, which should be no problem (ocelotgui is GPLv2, Qwt is LGPL, Qwt is on many distros). Pay no attention to the bug in the room.

But I’m not sure whether to call this the final look for the next executable release. Let me know in the comments if I’m missing tricks.

Delimiters in MySQL and MariaDB

In the SQL standard delimiters are for separating tokens; in MySQL/MariaDB they’re for ending statements, particularly compound statements as in

CREATE PROCEDURE p() BEGIN VALUES (5); END;

The problem is that a dumb client could stop at the first semicolon “;” in the statement (the one after VALUES (5)) instead of the one that truly ends (the one after END). The solution is to say that semicolon is no longer the default delimiter, and the way to specify a replacement is with a non-standard DELIMITER statement, such as

DELIMITER //

and after that the true statement end is //.

The idea isn’t unusual — in PostgreSQL’s psql client you can enclose compound statements with $$ which they call dollar quoting, with Microsoft you get used to saying “go” when you want something shipped to the server, with Oracle you might sometimes see SQLTERMINATOR. But it shouldn’t always be necessary — despite the fact that words like BEGIN are not reserved, a client with even a slight ability to recognize syntax can see where a compound statement ends without needing special tags.

Quirks

Some DELIMITER quirks are documented in either the MySQL manual or MariaDB manual, some are common to all mysql-client commands, but all are weird. They are:

() DELIMITER itself is not delimited

() After DELIMITER A B the delimiter is A

() After DELIMITER A; the delimiter is A;

() After DELIMITER 12345678901234567890 the delimiter is 123456789012345

() After DELIMITER ‘x’ or DELIMITER “x” or DELIMITER `x` the delimiter is x

() After DELIMITER ;; the delimiter is ;;

() Delimiters inside comments or character string literals or delimited identifiers are ignored

() After \dA the delimiter is A (\d is a substitute for DELIMITER and no space is necessary)

() After DELIMITER \ the effect can be odd, the MySQL manual recommends not to use \

() SELECT 5; DELIMITER ; will cause an error message

() DELIMITER ;; followed by SELECT 5;;; has a different effect from DELIMITER // followed by SELECT 5;//

() \G and \g will work whether or not there is a delimiter

It is not a gotcha that, when statements are in a .sql file and read by the SOURCE file-name statement, the rules are different: Compound statements will be recognized without special delimiters, and semicolons will always end non-compound statements even after a different delimiter has been specified.

It is not a quirk or a gotcha, it’s merely bad design, that DELIMITER forces the fundamental requirement of a parser — the tokenizer — to change what the tokenizing rules are while the tokenizing is going on.

Best practice

Both the MySQL and the MariaDB manual have example statements where the delimiter is // and that’s unlikely to cause confusion, since the only other uses of / are as a beginning of a bracketed comment or as a division operator, or in arguments to PROMPT or SOURCE or TEE.

Less frequently there is a recommendation for $$ but that is bad because $$ can occur inside regular identifiers.

DELIMITER !! is equally bad because !, unlike /, is a repeatable operator, for example SELECT !!5; returns 1.

DELIMITER ;; is worse and has caused bugs in the past, but the mysqldump utility will generate it before CREATE TRIGGER statements.

Avoid non-printable or non-ASCII characters, some clients will support them but others won’t.

You could set a delimiter at the start of a session and let it persist. But I believe the more common practice is to put DELIMITER [something appropriate] immediately before any CREATE PROCEDURE or CREATE TRIGGER or CREATE FUNCTION or BEGIN NOT ATOMIC statement, and DELIMITER ; immediately after. This might help readability by humans since otherwise they’d look at statements that end with mere semicolons and assume they’d go to the server immediately, not realizing that long ago there was a DELIMITER statement.

See also the Descriptive SQL style guide section “Semicolons”.

ocelotgui

The ocelotgui client doesn’t need DELIMITER statements because obviously a client that includes a stored-procedure debugger can recognize compound statements, but it will accept them because it tries to be compatible with the mysql client. It does not duplicate mysql client behaviour for the SOURCE statement but that should be fixed in the next version, and the fix is now in the source code which can be downloaded from github.

Duplicate column names

A former colleague from my MySQL AB days asked me aboutSELECT pk, SUM(amount) AS pk FROM t ORDER BY pk;

should the duplicate use of the name PK be illegal?

My reply is: A relational-database expert deplores it in the select list; the SQL standard says it’s conceptually illegal in the order-by clause; MySQL and MariaDB handle it in an odd way; the Ocelot GUI for MySQL and MariaDB doesn’t flag it.

A relational-database expert deplores

CJ.Date, in Date On Databases, Writings 2000-2006, has a multi-page section about “Duplicate Column Names”. I’ll just quote the firmest negatives.

… we can avoid the duplicate names if we want to. The problem is, we don’t have to, and SQL does not have to deal with the possibility that a given table might have column names that aren’t unique. As an aside, I should explain that … we don’t have to use the “AS column name” construct is because it wasn’t part of the original standard (it was introduced with SQL:1992), and compatibility therefore dictates that its use has to be optional. (I should also note in passing that — believe it or not — names introduced by AS don’t have to be unique!

*Every SQL table has a left-to-right column ordering *Every column in every named SQL table (i.e. base table or view) has a user-known name, and that name is unique within the table in question. But neither of these properties holds, in general, for unnamed tables (i.e. intermediate and final result tables). … I’d like to point out that duplicate and missing column names both constitute a fairly egregious violation of The Naming Principle

So nothing’s ambiguous about Mr Date’s evaluation, but he is acknowledging that SQL won’t be changing due to compatibility with old standards, and at least it wouldn’t allow duplicate column names in the select list of a CREATE VIEW statement.

The SQL standard says it might be illegal

The standard says this about Concepts:

This Clause describes concepts that are, for the most part, specified precisely in other parts of ISO/IEC 9075. In any case of discrepancy, the specification in the other part is to be presumed correct.

So we usually expect a specific syntax rule, but if it’s missing, we maybe can look at Concepts.

And this is SQL:2016, Foundation, 4.13 Columns, fields, and attributes, in the Concepts section:

Sometimes the Syntax Rules forbid two elements at different ordinal positions from having equivalent names (particularly when created through an SQL schema statement). When two elements with equivalent names are permitted, then the element name is ambiguous and attempts to reference either element by name will raise a syntax error.

That suggests that if pk appears twice in select list then ORDER BY pk; should fail, but it’s not explicitly stated in the Syntax Rules. This in contrast with GROUP BY, which has a clear Syntax Rule, that is, “Each grouping column reference shall unambiguously reference a column of the table resulting from the from clause.” Perhaps when I was on the ANSI committee I should have asked why Syntax Rule for ORDER BY doesn’t also say “unambiguously”, but it’s too late now.

MySQL and MariaDB handle it in an odd way

I tried variations of the syntax with MySQL 8.0.31 and MariaDB 10.10.1, sql_mode=only_full_group_by is not true, sql_mode=ansi_quotes is true. They both return the same results.

CREATE TABLE t (pk INT, amount INT); INSERT INTO t VALUES (1,2), (2,1),(1,2),(2,1); SELECT pk, (amount) AS pk FROM t ORDER BY pk;

Result: Error 1052 (23000) Column ‘pk’ in order clause is ambiguous

SQLSTATE values starting with 23 are for “integrity constraint violation”, so it would be better to have something starting with 42 (syntax error or access violation), I described good old ’42’ in an earlier blog post. However, the error message is good and consistent with standard SQL.

SELECT pk, SUM(amount) AS pk FROM t ORDER BY pk;

Result: 1,6.SELECT pk, 5 AS pk, amount FROM t ORDER BY pk,amount DESC;

Result: (1,5,2),(1,5,2),(2,5,1),(2,5,1)SELECT pk, (SELECT SUM(amount) AS pk FROM t) AS "pk" FROM t ORDER BY pk;

Result: (1,6),(2,6),(1,6),(2,6)

These results are harmless — there’s either an implied grouping or a literal for the main sort, so they’re always the same value, so they can be optimized away. Nevertheless, they’re inconsistent — if “Column ‘pk’ in order clause is ambiguous” is detectable, and it’s a syntax error not a runtime error, it shouldn’t have been ignored.

SELECT pk * - 1 AS pk, pk FROM t ORDER by pk;

Result: (-2,2),(-2,2),(-1,1),(-1,1)SELECT pk, pk * - 1 AS pk FROM t ORDER by pk;

Result: (2,-2),(2,-2),(1,-1),(1,-1)

These results are odd, they show that we’re ordering by the pk that has an AS clause, regardless of which column is first in the select list.

SELECT SUM(amount) AS pk, pk FROM t GROUP BY pk ORDER BY pk;

Result: (2,2),(4,1)

Also there is a warning: Column ‘pk’ in group statement is ambiguous. But it does succeed, and once again the item with the AS clause is preferred. This syntax is explicitly declared illegal in standard SQL.

SELECT * FROM (SELECT 1 AS a, 1 AS a) AS t1, (SELECT 1 AS a, 1 AS a) AS t2;

Result: Error 1060 (42S21) Duplicate column name ‘a’

This is the right result, and the SQLSTATE is better because it starts with ’42’.

The reason it’s correct is that Trudy Pelzer long ago reported a bug with duplicate names in subqueries, which are illegal due to a different item in the standard, and Oleksandr Byelkin fixed it.

The Ocelot GUI for MySQL and MariaDB doesn’t flag it

An obvious thought is: since ocelotgui recognizes MySQL/MariaDB syntax, and gives hints or autocompletions while the user is entering a statement, should I be adding code to catch this? After all, we stop most illegal syntax before it goes to the server.

But I’m wary. The behaviour that I describe here is undocumented, so it could change without warning, and besides who are we to reject what the server would accept? It won’t be at the top of the “To Do” pile.

Speaking of “To Do” piles, ocelotgui 1.8 has now been released, and is downloadable from github.

ocelotgui version 1.7

Version 1.7 of ocelotgui has these changes.

Bug fix: due to a recent MariaDB change, our stored-procedure debugger could not be installed when connecting to a MariaDB server (MySQL debugging was not affected). Now all is well again.

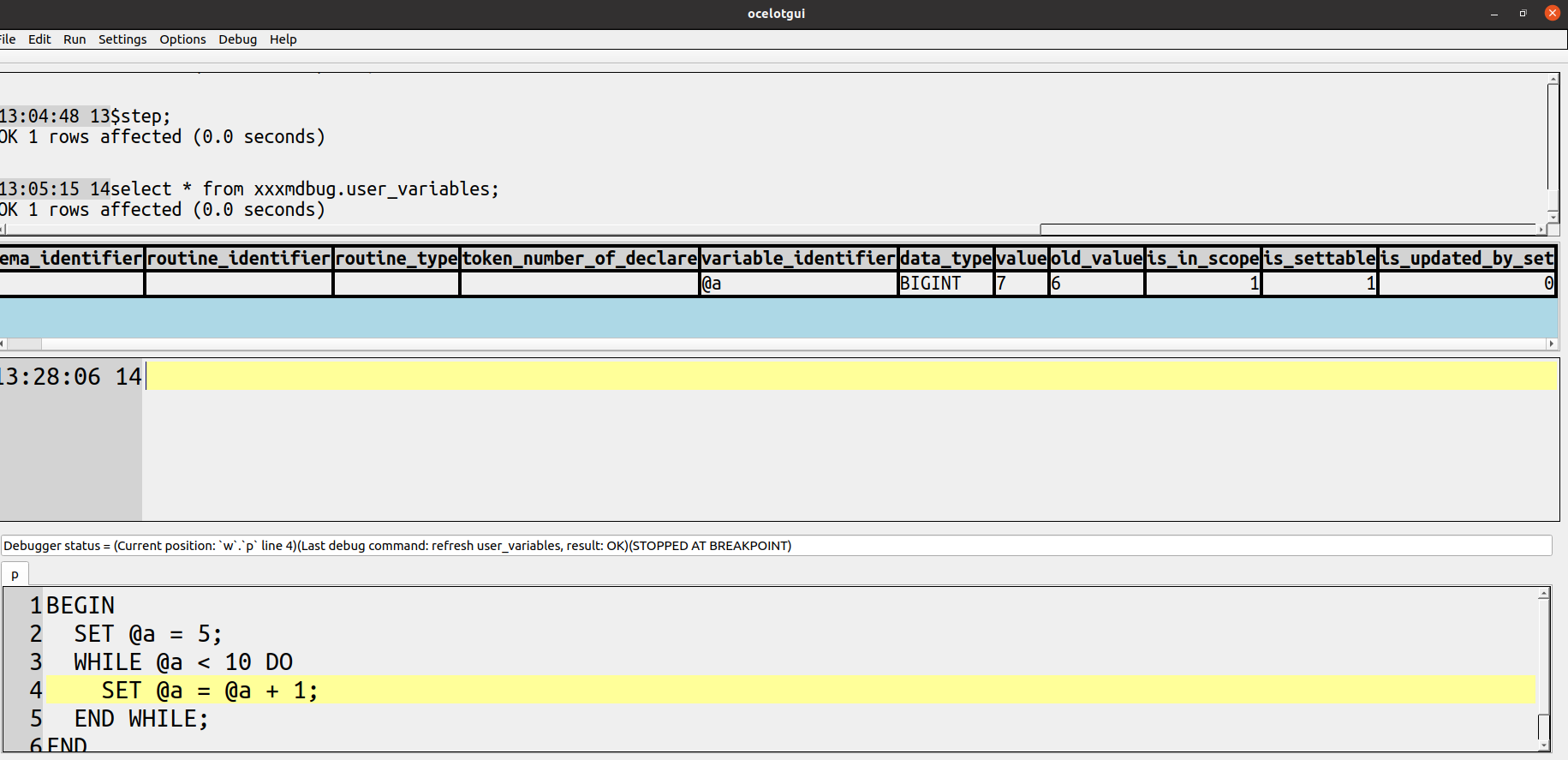

Here, for example, is a screenshot of ocelotgui after connecting to MariaDB 10.8, saying $debug P; and then Alt+5 (“Step”) 4 times, then clicking menu item “Refresh user variables” to see the previous and current values of @a:

/

Incidentally I’ve just noticed some old comments about ocelotgui on https://stackoverflow.com/questions/3007625/mysql-stored-procedure-debugging with one user saying “… helped me a lot!” and another saying “I connected, debugged their tutorial, and am amazed. it’s a GREAT step debugger with variable data view on demand. lots to learn, but the #1 choice so far for free.” Don’t get the wrong idea though — debugging is a unique feature but not the major one.

New platform: Actually ocelotgui runs on any Linux distro (and Windows of course); however, the only specific instructions for creating packages were ones for .deb (Debian-based) and .rpm (Red-Hat-based). Now there is a script file for creating packages on Arch-Linux-based distros too, for example Manjaro or EndeavourOS. It’s PKGBUILD.

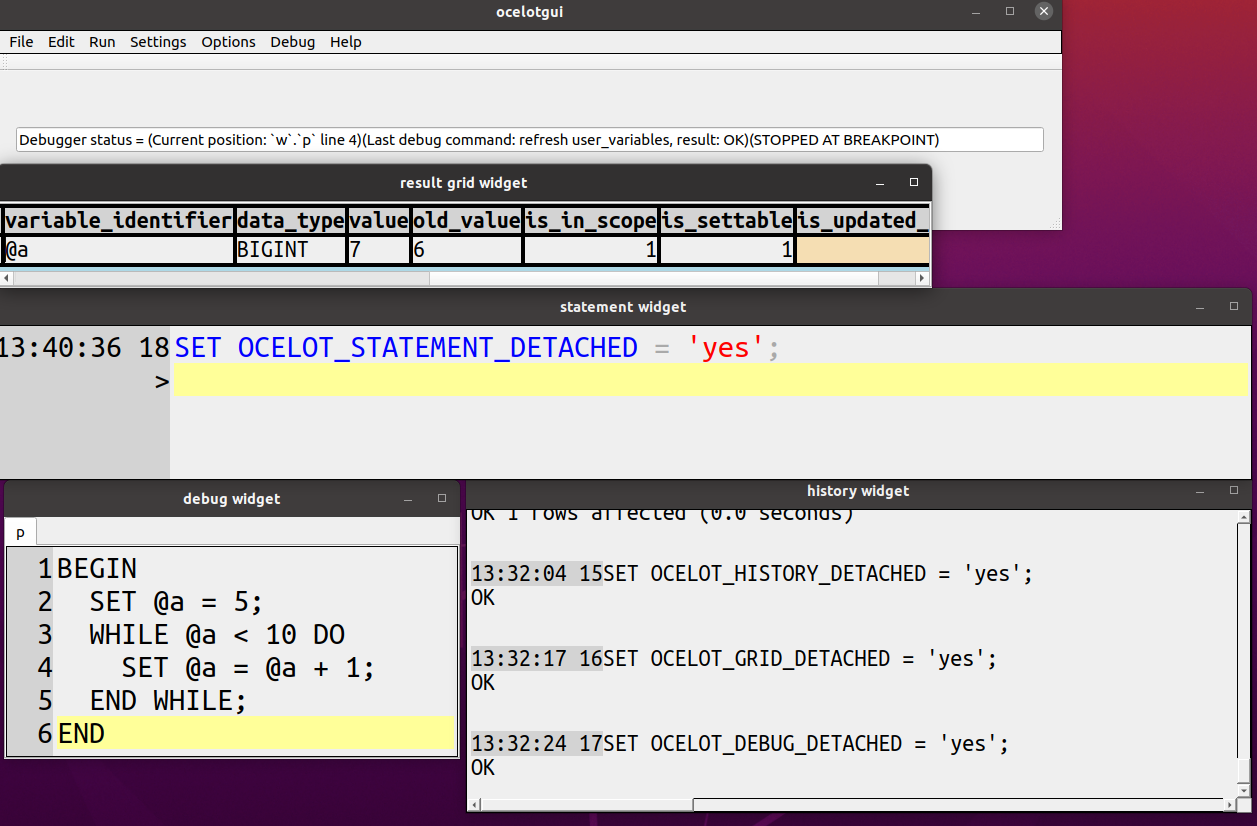

Enhancement: Detaching wasn’t quite as spiffy as it should be because our size calculations were slightly wrong. Here’s a screenshot showing the same widgets as before, after detaching them all and doing some manual fiddling:

As usual, downloads and executables are available on our github repository.

Autocompletion with the mysql client

The concept of autocompletion is old and simple. You type some characters; the program shows some choices for what characters might follow; you can choose a choice by hitting a special key. It can save on typing, and save on looking up in dictionaries or information schemas.

I will illustrate what the manuals mean, then show features or quirks that the manuals fail to say, then compare with a GUI.

The Example

For The Example, I used MySQL 8.0 mysql client and MariaDB 10.5 mysql client, on Linux. I understand that they work on Windows 10 if you install a Linux shell.

You should find it easy to follow along with your own copy of mysql client, even if you started with non-default options and have minimal privileges.

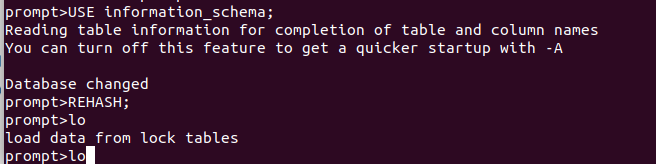

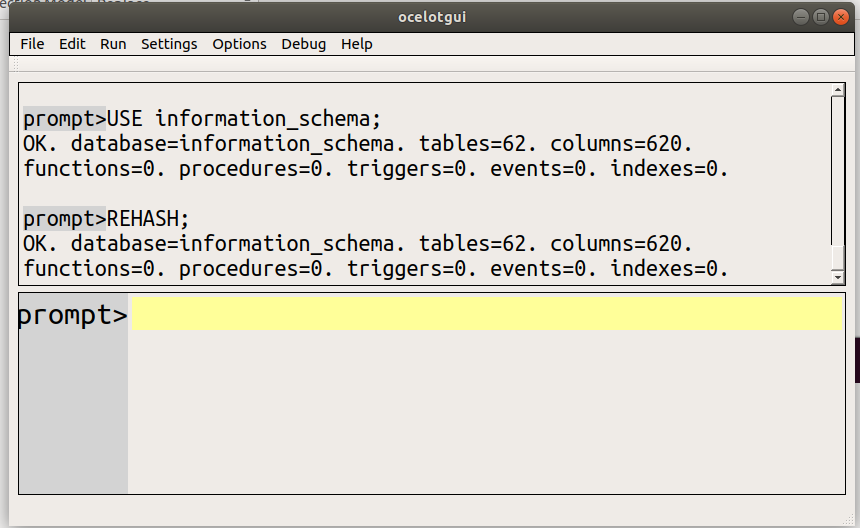

The first thing to do is declare a “current database” with USE, and say that you want to enable autocompletion with REHASH.

I’ll discuss later why these might be unnecessary statements, but there’s no harm in making sure.

USE information_schema; REHASH;Now type

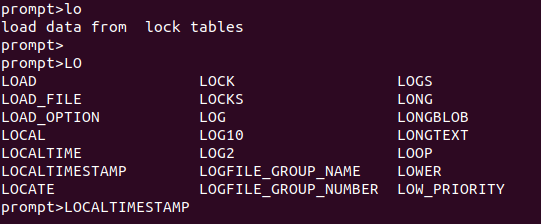

lo then type the [Tab] key twice. Your screen will look like this:

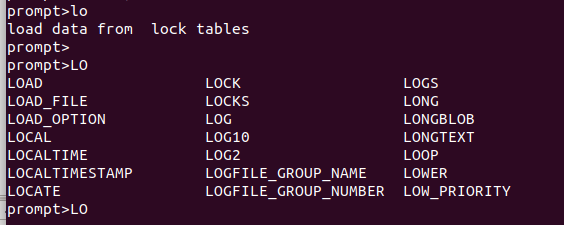

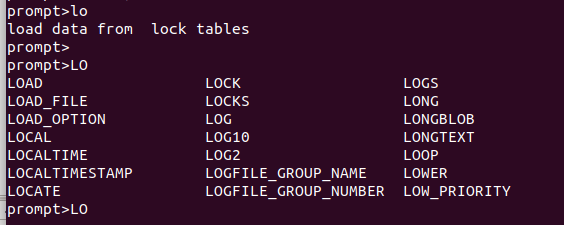

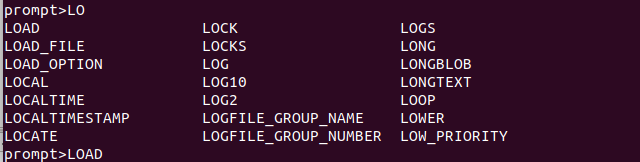

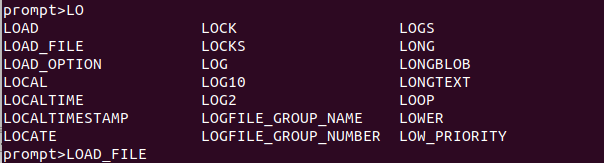

Now type the [Backspace] key twice, then type LO, then type the [Tab] key twice. Your screen will look like this (the items might be in a different order depending on the server):

Now type the [Backspace] key twice, then type LOCALTIMEST, then type the [Tab] key once. Your screen will look like this:

At this point you have probably figured out these things:

* Searching is case sensitive, lo and LO are different

* If there is more than one choice, [Tab] [Tab] causes a display

* If there is only one choice, [Tab] causes a replacement

* Context is irrelevant, most of the choices would be illegal as statement start

* Choices include table names, column names, and keywords.

To complete what you could figure out, you could try these additional tests:

inf [Tab] [Tab] — you’ll see information_schema because choices also include database names

BEG [Tab] [Tab] — you’ll see BEGIN because choices also include non-reserved keywords

INF [Tab] [Tab] — there will be a question whether you want to see all choices because 100+ choices may not be a useful hint.

Command line options

To back up a bit, the reason that the USE and REHASH statements might have been unnecessary is that there might be defaults.

If you started mysql with --database=database_name or -D database_name, then USE is automatic when you connect.

If you started mysql with –auto_rehash, or you just omitted the option because it is default default, then REHASH is automatic when you USE.

Do not let the “auto” in “–auto-rehash” deceive you too much though. REHASH will not happen automatically if anyone changes the current database with CREATE or DROP or ALTER. REHASH will not happen automatically if you say USE x; when x is already the default database.

So if you worry about depending on obsolete choices, you will need to do manual REHASHes occasionally.

The REHASH Statement

prompt> REHASH; /* or /# */

REHASH is not a good name — it hints about how the job is implemented, rather than what it does, which is: select the names of databases, names of tables (both base tables and viewed tables but not temporary tables) in the current database, names of columns of those tables, keywords (both reserved words and unreserved words), and names of mysql commands. Completion will not work if REHASH has not happened, not even for keywords.

REHASH will not select names of tables that you don’t have privileges for. There is a small security breach though: If you have a column-level privilege for any column in the table, then the menu choices will include all columns in the table, including the ones you don’t have privileges for. (In theory you are not supposed to know about such columns, which is why you won’t see them in information_schema.)

Unlike USE, which tells you “Reading table information for completion of table and column names”, REHASH gives no feedback. But it does succeed. If you have only a few thousand tables and columns, it takes negligible time.

What library mysql is using

For most editing operations, including [Tab] handling, the mysql client passes off the work to an open-source library, either readline or libedit.

If you have an old MySQL version, or if you have MariaDB, your mysql client probably has readline. One way to tell is to say

mysql --help | grep readline

Any non-blank response, for example

mysql Ver 15.1 Distrib 10.1.47-MariaDB, for debian-linux-gnu (x86_64) using readline 5.2

indicates that your mysql client has readline. Alternatively you could say “whereis mysql … ldd mysql-location” and look for libreadline.so in the output.

If you have MySQL 5.6.5 or later, your mysql client almost certainly has bundled libedit. (In version 5.5 there was still a CMake option -DWITH_READLINE=1, but it is gone now.) If you have MariaDB, your mysql client probably does not have libedit unless you built from source and passed the -DWITH_LIBEDIT option to cmake. One way to tell is to create a file named ~/.editrc and insert this temporary line:

mysql:bind

(If the file already exists, no problem, just add a line saying mysql:bind at the start.) Now start the mysql client. If it displays a list titled “Standard key bindings”, then your client has libedit. Specifically it is close to the NetBSD variation of libedit. Now remove the line saying mysql:bind from libedit.

It has been suggested that MySQL/Oracle’s changed preference was due more to licence considerations than to features (readline is GPL and libedit is BSD), and indeed it is true that there are more things you can do with autocompletion if you use the mysql client from MariaDB, as we will see.

Today (November 6 2020) the MySQL 8.0 manual still has mentions of readline, here and here and here. They are obsolete, ignore them.

Using a different key instead of [Tab]

The special key for completion is [Tab] so sometimes autocompletion is called “tab completion”. But it doesn’t have to be. You can change the “key binding” — the way that a particular key is connected to a particular operation — by changing a file that the library reads when you start the mysql client. There is no denying that [Tab] is the more popular choice on Linux, partly because it is the default for both libedit and readline, and some Linux utilities such as bash depend on readline. But [Tab] is a displayable character so it is unusual — and I think bizarre — to see it within an application that is editing text. There is a way to say “Let [Tab] be [Tab], and use a control character for completion.”

If the library is libreadline, then the file to change is ~/.inputrc. If it does not already exist, then you can create it, but then be sure to $include /etc/inputrc at the top, otherwise the user’s copy will just override the system one. Make it look like this:

$include /etc/inputrc Control-I: tab-insert Control-N: complete

This would also work:

$include /etc/inputrc TAB: self-insert Control-N: complete

Unless you have an exotic terminal, [Tab] and Control-I have the same effect.

If the library is libedit, then the file to change is ~/.editrc and the key binding that matters for completion is rl_complete. Edit ~/.editrc again and remove the line that says bind, and add these lines:

mysql:bind "^N" rl_complete mysql:bind "\t" ed-insert

I like it that I can specify that I only want to affect mysql.

Now start the mysql client again, and once again say

USE information_schema; REHASH; DROP TABLE

and now type control-N twice. You will see that it displays exactly the same thing that you saw when you typed [Tab] twice. However, if you now type [Tab], you will get a tab.

Warning: before changing a key binding, make sure that the key is not used by other programs or has some default libreadline/libedit binding already. And check with stty -a.

Undocumented readline features

Actually these key bindings are documented for readline,

but the MySQL and MariaDB manuals don’t list them so I’m

guessing most mysql users don’t know about them. To see what the relevant key bindings are, I use bind:

$ bind -P | grep complete

complete can be found on "\C-n", "\e\e".

complete-command can be found on "\e!".

complete-filename can be found on "\e/".

complete-hostname can be found on "\e@".

complete-into-braces can be found on "\e{".

complete-username can be found on "\e~".

complete-variable can be found on "\e$".

dynamic-complete-history can be found on "\e\C-i".

glob-complete-word can be found on "\eg".

menu-complete is not bound to any keys

menu-complete-backward is not bound to any keys

old-menu-complete is not bound to any keys

vi-complete is not bound to any keys

This is rather cryptic but you probably can guess that “\C-n” means “Control-N”, which is the suggestion that I made for ~/.inputrc. However, the interesting items are menu-complete and menu-complete-backward. Let’s bind them to some keys and see what a readline-based mysql client does with them.

First change ~/.inputrc again so now it looks like this:

$include /etc/inputrc Control-I: tab-insert Control-N: complete Control-J: menu-complete Control-K: menu-complete-backward

Now start mysql again and do some of the same steps as before:

USE information_schema; REHASH;

And now type LO and then type control-N twice

(not [Tab] any more), and you’ll see

Now type Control-J. Suddenly the word becomes the first choice

in the menu

Now type Control-J again. Now the word becomes the second choice in the menu

Keep typing Control-J and eventually readline will cycle back

to the first choice. Or type control-K and completions will be of the previous items.

This, I think, is how autocompletion should always work.

If you have a menu of choices, you should have a way to

navigate to a specific choice by typing a single key.

Try out even more behaviour changes by adding these lines at the end of ~/.inputrc:

# Case-insensitive (disabled) set completion-ignore-case On # Don't do anything until user types at least 3 characters (disabled) set completion-prefix-display-length 3 # If there are more than 5 choices ask "Display all xx possibilities?" set completion-query-items 5 # Of course disable-completion No is default set disable-completion No # If there are many choices don't show a page at a time and ask for "More" set page-completions Off # Menu options should be vertical, that would be normal (disabled) set print-completions-horizontally No # If there are two or more choices show them after hitting special key once set show-all-if-ambiguous Yes # Show what is changed (disabled) set show-all-if-unmodified On # If it is completed then put the cursor after it set skip-completed-text On # Don't hear a bell, see a bell (disabled) set bell-style visible

These are not libedit features, but I shouldn’t omit mentioning that libedit has many other key bindings and customization chances, unrelated to autocompletion. It is not hard to use MariaDB’s mysql client to connect to MySQL’s server, but I doubt it’s worthwhile just for some extra autocomplete gizmos.

In a GUI client

It should be clear that the mysql client does an adequate autocompletion job. But I can think of many clients that do a better job, from either MySQL or third parties. I am only going to illustrate one of them — our ocelotgui — but I am not suggesting that it is unique in this respect, since any competent GUI will have at least a few of these additional features. And I am not suggesting that any one of these additional features is important — only “autocompletion” itself is important. But I think that as a whole they save some time and trouble.

You should be able to follow along with your own copy of ocelotgui, if you have downloaded the latest version from github.

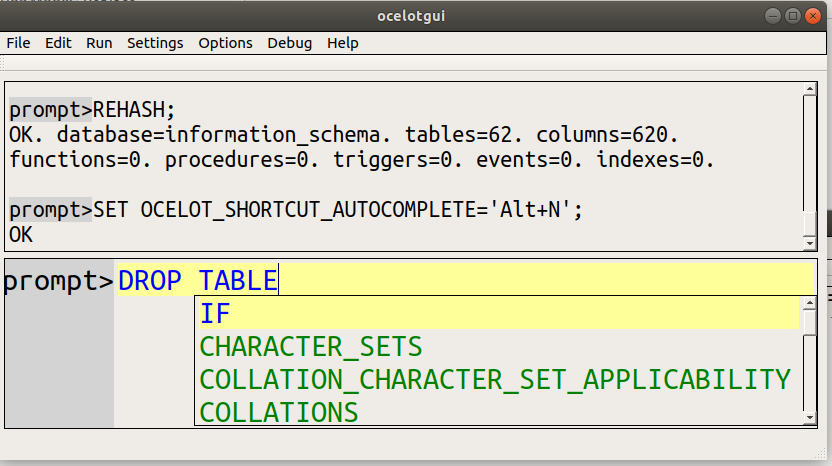

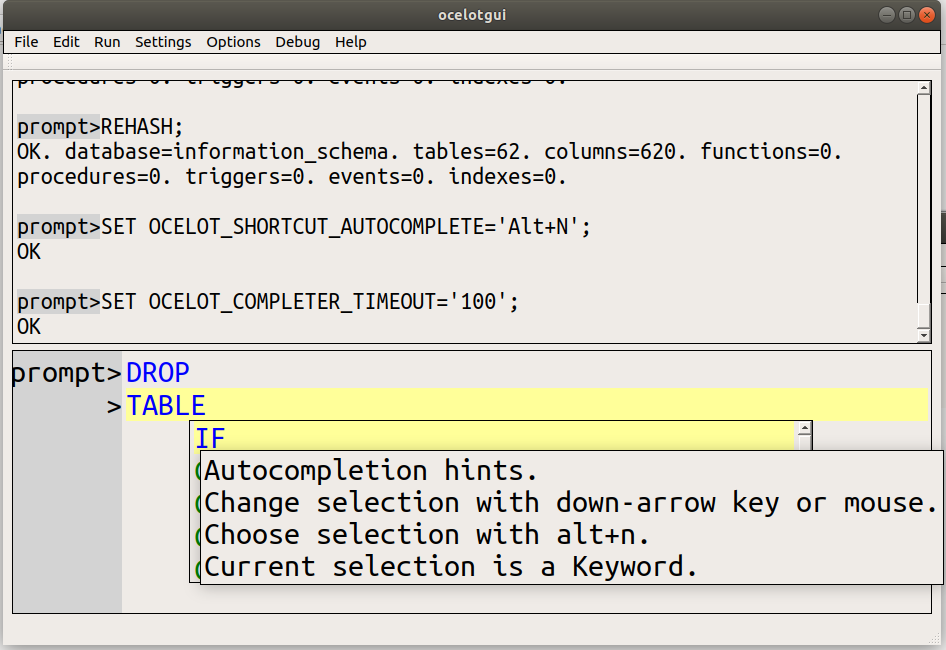

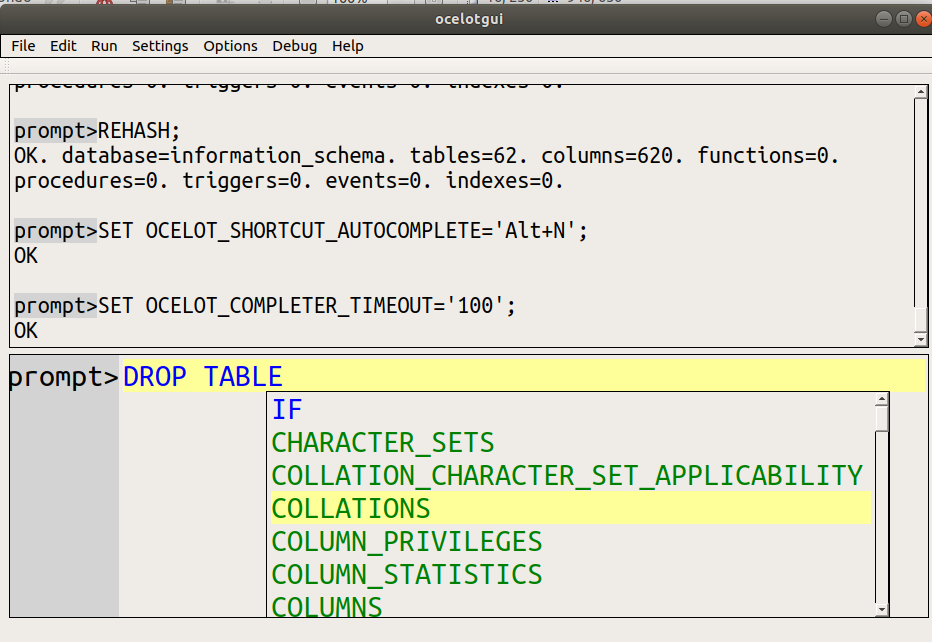

Say USE information_schema and REHASH.

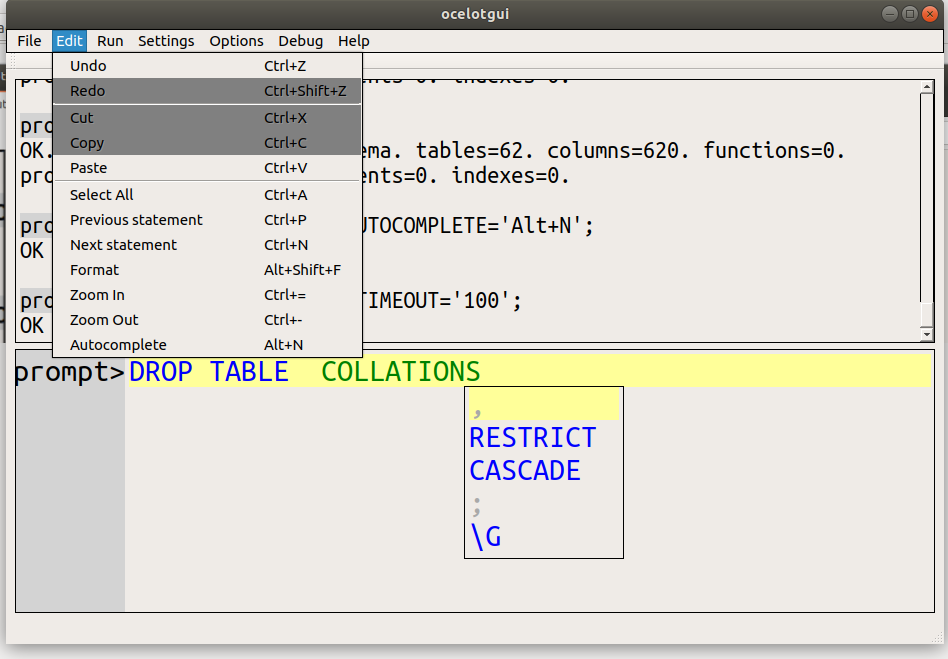

Change so completion is done with Alt-N rather than [Tab].

Move the cursor and hover, to see instructions.

Use the down-arrow to select one of the menu items.

Type Alt-N or click Autocomplete on the main Edit menu

Wait OCELOT_COMPLETER_TIMEOUT seconds for the menu to disappear.

Notice these differences …

(1) Changing [Tab] can be done within the program (although of course it can also be done by changing a file).

(2) The choices appear immediately, with a GUI there’s no need for [Tab] or a special key to make them appear.

(3) The choices include only what is relevant in context, i.e. after DROP TABLE the only possible items are IF and table names.

(4) The colour of the choices is the same as the colour of the highlighting, which is why IF (a keyword) and * (an identifier) look different.

(5) The navigation is done with arrow keys on a purely vertical menu with a scroll bar, regardless of number of choices.

(6) Hints and menu choices appear temporarily, as in IDEs.

Another difference is that this works the same way on Windows as well as Linux, out of the box.

Of course you can’t actually drop a table in information_schema, and ocelotgui will fail to warn you about that. I admit as much. “Perfection” is still on the to-do list.